One of the things that we take for granted on IBM i is the ability of this operating system to manage memory usage thanks to its virtual memory management—single-level storage, as we call it.

IBM i Performance Adjustment

Single-level storage has many benefits. It frees your application programs and their data from having to be managed in a shared memory space. The operating system takes care of loading programs and data into main storage (RAM) from auxiliary storage (disk) in appropriate chunks so that the processor can do its job.

However, this moving of programs and data from disk can cause an I/O bottleneck, which leaves you in a quandary. How much memory do you allocate to the work that you need IBM i to do? Where do you change this? How can you monitor the results? This scenario has been referred to as the “black art” of performance adjustment in IBM i circles, but there’s no need to fear.

We’ll start with the “Work with System Status” command WRKSYSSTS (or DSPSYSSTS if you don’t have authority to the work command). Execute the command and follow the results using the guidelines below.

Terminology, Pool Architecture, and Recommendations

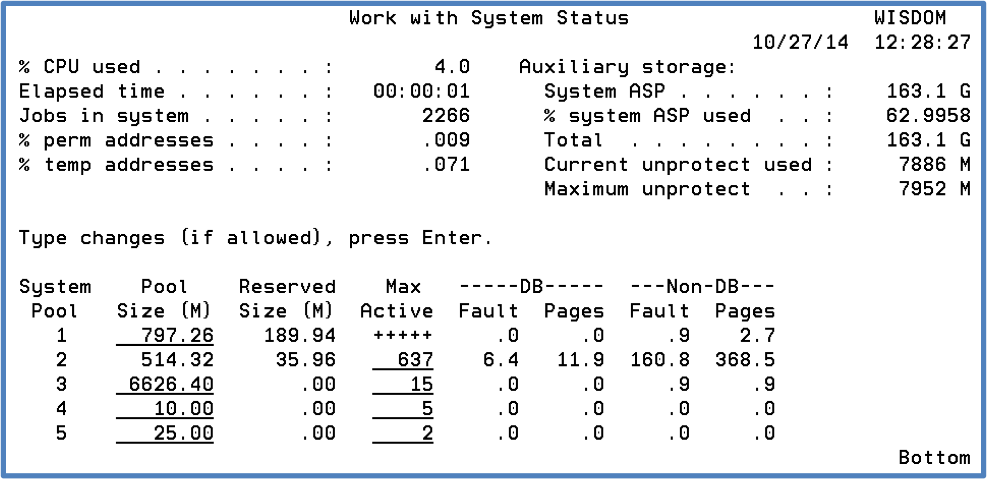

Let’s explain what you’ll see on the WRKSYSSTS panel and how to interpret the data (see figure 1).

Figure 1: The WRKSYSSTS command displays IBM i performance information.

System memory pools are numbered 1 to 64. Add up the Pool Size column and you have the total memory on the system. Typically, there are four pre-defined memory pools on your system:

- System Pool 1 is the *MACHINE pool where the operating system and LIC operate.

- System Pool 2 is the *BASE pool where all unused memory is placed and where, by default, many vendor and user applications run.

- System Pool 3, System Pool 4, and above can change based on the order in which your subsystems start up. For instance, if your QINTER subsystem starts first, it will be assigned as System Pool 3 and labeled *INTERACT. If QSPL starts up next, then it will be System Pool 4 and assigned as *SPOOL.

Hopefully, you or your previous administrators or application providers created additional pools to separate the types of work on your system. Workloads that are similar should be routed into shared pools with similar activities. For instance, batch work could all be routed into its own pool so as not to interfere with your web services activity. Initial pool sizes are assigned using system values, the WRKSHRPOOL screen, and the CHGSBSD command for private pools.

Performance adjusters will adjust memory pools based on demand and on threshold values that are set in system values and the WRKSHRPOOL screen. The adjuster can be the built-in tuner (turned on by setting system value QPFRADJ to 2 or 3) or Robot. In Robot, we call these performance factors.

Machine Pool – System value QMCHPOOL is the minimum machine pool size. Automatic performance adjusting has been known to adjust the machine pool up to 40% of the total memory on the system due to shared machine and operating system programs. The performance adjuster will attempt to adjust this pool until the total combined database and non-database faulting rate is below 10. It is critical that the faulting rate for this pool be kept below 10 faults per second (see database and non-database faulting below).

Reserved Size (by memory pool) – This value is calculated by the operating system, is for system use only, and cannot be used by jobs. It is critical that enough memory be allocated to the machine pool at the outset so that reserved size does not exhaust it. The QMCHPOOL system value will be changed by the built-in performance adjusters automatically so a manual adjustment should not be necessary.

As a starting point, we recommend these minimum pool sizes for systems with 10 GB main memory or more:

- 7–10% of total memory for *MACHINE (system pool 1)

- 5–8% of total for *BASE memory pool (system pool 2)

- 1 MB per interactive session for *INTERACT (system pool 3)

- 1 MB for *SPOOL (system pool 4)

Custom batch memory pools should be large enough for 5 MB per batch job at a minimum.
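The starting-point arithmetic above can be sketched in a few lines of Python. This is only an illustration of the percentages and per-job figures listed here; the function name, its parameters, and the example workload (200 interactive sessions, 20 batch jobs on a 32 GB system) are assumptions made for the example, not part of IBM i or Robot.

```python
def minimum_pool_sizes_mb(total_memory_mb, interactive_sessions, batch_jobs):
    """Return starting-point minimum pool sizes in MB.

    Percentage-based pools get a (low, high) range; the others get one value.
    """
    if total_memory_mb < 10 * 1024:
        raise ValueError("these guidelines assume 10 GB of main memory or more")
    return {
        # 7-10% of total memory for *MACHINE (system pool 1)
        "*MACHINE": (total_memory_mb * 7 / 100, total_memory_mb * 10 / 100),
        # 5-8% of total memory for *BASE (system pool 2)
        "*BASE": (total_memory_mb * 5 / 100, total_memory_mb * 8 / 100),
        # 1 MB per interactive session for *INTERACT
        "*INTERACT": interactive_sessions * 1,
        # 1 MB for *SPOOL
        "*SPOOL": 1,
        # 5 MB per batch job for a custom batch pool ("batch" is a made-up label)
        "batch": batch_jobs * 5,
    }


# Hypothetical workload on a 32 GB system.
sizes = minimum_pool_sizes_mb(32 * 1024, interactive_sessions=200, batch_jobs=20)
for pool, size in sizes.items():
    print(pool, size)
```

Treat the results as floors to compare against the WRKSHRPOOL screen, not as targets; the performance adjuster will move the actual sizes with demand.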

Web and Java applications have specific tuning requirements, and they are typically multi-threaded. Those processes need special handling and will cause the Max Active Thread requirements to go up. Use WRKACTJOB OUTPUT(*PRINT), or press F11 twice on the WRKACTJOB display, to see the thread count for those jobs. Your performance tuner will adjust your pool sizes and activity levels based on this type of activity as well.

Max Active Threads (by memory pool) – This is the number of job or task threads that can get a time-slice from the CPU at any one time. This setting is more art than science and can greatly affect the performance of the work on your system. Generally, allowing a large number of jobs to get CPU time (called an activity level) is not good. Performance may actually be better by having a smaller Max Active, allowing its work to get done before moving on to new work.

Paging Option – Also known as expert cache, this value can be set to *CALC for all shared memory pools. System pool 1 must remain as *FIXED. *CALC calculates and changes the read-ahead amount to bring larger and larger chunks of data into main memory—especially for sequential query, report, or batch processing operations—which makes data available to your jobs and avoids faulting. A fault means data is not in main memory and needs to be loaded from disk. *CALC works best for memory pools doing batch work. More on faulting next!

Database and Non-Database Faults – A fault here is also known as a page fault. These values indicate how often pages are being read from disk into main memory. The pages may be programs (non-database fault) or records from DB2 (database fault).

A high faulting rate will affect system performance. Acceptable faulting rates are not easily calculated without referring to additional IBM documentation. Generally, a high faulting rate indicates poorer system performance and can be reduced by increasing Pool Size, decreasing Max Active, or both. For system pool 1 (*MACHINE), IBM recommends a faulting rate of no more than 10 faults per second for combined database and non-database faults. Other pools can tolerate much higher faulting rates.
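As a minimal sketch, the machine pool guideline amounts to adding the two fault columns for system pool 1 and comparing the sum with 10. The function and constant names below are hypothetical, and the sample readings are made up; the values would be read by hand from the WRKSYSSTS display.

```python
# Combined database + non-database faults per second for *MACHINE,
# per the guideline quoted in the text.
MACHINE_POOL_FAULT_LIMIT = 10.0


def machine_pool_faulting_ok(db_faults, non_db_faults,
                             limit=MACHINE_POOL_FAULT_LIMIT):
    """Return True when the combined faulting rate is within the limit."""
    combined = db_faults + non_db_faults
    return combined <= limit


# Made-up readings for system pool 1.
print(machine_pool_faulting_ok(db_faults=2.5, non_db_faults=4.0))
print(machine_pool_faulting_ok(db_faults=6.0, non_db_faults=7.5))
```

The same check does not carry over to other pools, which can tolerate much higher faulting rates.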

Too many active jobs or threads and not enough memory means that programs and data will be moved into and out of main memory onto disk. When this occurs too much, too often, and by many jobs, we call it “thrashing” and it will bring your processing to its knees if you aren’t aware of this activity. Monitoring performance and automatically adjusting memory will help you with this task.

Active to Wait – This measures how often jobs in the active state, where they are consuming data and using CPU, go into a wait. A job in a short wait (such as for disk I/O) keeps its activity level, which allows it to continue to access CPU time. When a job has a long wait (such as for a tape drive response or input from a user), it loses its activity level, which is then given to another job or thread.

Wait to Ineligible – Jobs that use up their CPU time slice, have a long wait for a system resource, or lose their activity level go to an ineligible status. The OS prioritizes them based on run priority and several other factors; eventually, when an activity level opens up again, they get additional CPU cycles. The process repeats until complete.

Generally, IBM says that if the Wait to Ineligible is always zero, your activity level for that pool might be set too high and the pool is not using all its activity levels. Another general rule: your Wait to Ineligible should not be more than 20% of your Active to Wait value. If the ratio is too high, too many jobs are being paged out to auxiliary storage and back in.
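The two rules of thumb above can be written as a small check over several readings of one pool. This is a sketch under assumptions: the function name, the return strings, and the idea of summing a handful of samples are illustrative choices, not an IBM or Robot algorithm.

```python
def assess_pool_transitions(samples, max_ratio=0.20):
    """Apply the two rules of thumb to readings for a single pool.

    samples: list of (active_to_wait, wait_to_ineligible) pairs.
    """
    if not samples:
        return "no data"
    # Rule 1: Wait to Ineligible that is always zero suggests the pool
    # is not using all of its activity levels.
    if all(w_i == 0 for _, w_i in samples):
        return "activity level may be too high"
    total_a_w = sum(a_w for a_w, _ in samples)
    total_w_i = sum(w_i for _, w_i in samples)
    # Rule 2: Wait to Ineligible should not exceed 20% of Active to Wait.
    if total_a_w == 0 or total_w_i / total_a_w > max_ratio:
        return "ratio above guideline"
    return "within guideline"


# Made-up readings taken a few minutes apart.
print(assess_pool_transitions([(120.0, 0.0), (95.0, 0.0)]))
print(assess_pool_transitions([(120.0, 10.0), (95.0, 12.0)]))
print(assess_pool_transitions([(100.0, 30.0)]))
```

Several samples are used because the first rule only applies when the value is always zero; a single zero reading on a quiet system says little.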

Active to Ineligible – This shows how often jobs are moved to the ineligible queue without getting an activity level. The number should be as low as possible without being zero. If it is always zero, then there is always an available activity level, which means the pool’s activity level is set too high.

Your Performance Adjustment Ally

There are two Robot tools in the performance category that help you with your system memory tuning and performance monitoring. The first is Robot Autotune, which is effectively a replacement for the built-in tuner with additional features for tracking memory demand, isolating batch work, and automatically throttling down offending work on your system.

The second is Robot Monitor performance and application monitoring software, which can monitor over 300 different performance factors on your system, including disk space, job count, CPU utilization, disk busy, and memory pools. Thresholds can be set for performance monitoring, and notifications are sent out immediately if they are exceeded.

Get Started

If you’ve never looked at the memory usage on your system and just throw money at your performance issues (more memory, faster or SSD disk units, etc.), take a look at breaking out your work and adjusting pools appropriate to your environment. And check out Robot Monitor to get the best performance out of your system…and no surprises.