1. Introduction

Both server capacity planning and network capacity planning are important disciplines for managing the efficiency of any data center. The focus of this eBook is on server capacity planning.

Traditionally, server capacity planning is defined as the process by which an IT department determines the amount of server hardware resources required to provide the desired levels of service for a given workload mix at the least cost. The capacity planning discipline grew up in the mainframe environment, where resources were costly and upgrades took considerable time. As the data center transitioned to a distributed environment using UNIX, Linux, and Windows servers, over-provisioning and the introduction of cheap new boxes served as a replacement for capacity planning. However, as the distributed data center has matured, these practices have proven to be less than optimal.

The average utilization of many servers in the distributed data center is well below acceptable maximums, wasting costly resources. Further, as the economy evolves, IT is expected to do its share to become more efficient, both in terms of hardware and human resources. Finally, IT is now becoming an accepted partner in the organization’s drive to grow revenue while increasing profits and remaining competitive for the long term. All of these factors require more mature IT processes, including the use of capacity planning, a core discipline every company should adopt.

There are a number of types of capacity planning, including the following:

- Capacity benchmarking

- Capacity trending

- Capacity modeling

Benchmarking, or load testing, is perhaps the most common type, but also the most expensive. The idea is to set up a configuration and then throw traffic at it to see how it performs. To do this right, you need access to a fully configured version of the target system, which often makes benchmarking impractical.

Linear trend analysis and statistical approaches to trending provide quick-and-dirty ways to predict when you will need to do something about performance, but they don’t tell you how best to respond. Trending does not provide a way to evaluate alternative solutions to an impending problem, nor does it help with questions unrelated to trends, such as server consolidation or adding an application.
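As a rough illustration of linear trending, the sketch below fits a straight line to a utilization history and estimates how many periods remain before the trend reaches a threshold. The function names, the sample data, and the 80% threshold are all assumptions made for this example; they do not come from any particular tool.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(history: Sequence[float], threshold: float) -> Optional[float]:
    """Periods after the last sample until the fitted trend reaches threshold.

    Returns None when the trend is flat or falling.
    """
    xs = list(range(len(history)))
    slope, intercept = fit_line(xs, history)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - xs[-1])


# Hypothetical monthly average CPU utilization (%), oldest first.
monthly_cpu_pct = [40.0, 45.0, 50.0, 55.0, 60.0]
months_left = periods_until(monthly_cpu_pct, threshold=80.0)
print(months_left)
```

Note what the sketch cannot do: it says when the threshold may be reached, but nothing about which change to the configuration would best address it.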

That leaves modeling, which comes in two flavors: simulation modeling and analytic modeling. Simulation modeling can be very versatile and accurate, but requires a great deal of setup effort and time. Analytic modeling is fast and is potentially very accurate as well. The beauty of modeling is that you can “test” various proposed solutions to a problem without actually implementing them. This can save a lot of time and money.
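To give a feel for how an analytic model lets you "test" alternatives on paper, here is a minimal sketch that uses the textbook single-server queueing formula R = S / (1 - U). This is an assumption chosen for illustration, not a description of how any commercial modeling tool works, and the arrival rate and service times are hypothetical.

```python
def mm1_response_time(service_time_s: float, arrival_rate_per_s: float) -> float:
    """Average response time of a single-server queue: R = S / (1 - U)."""
    utilization = arrival_rate_per_s * service_time_s  # utilization law: U = X * S
    if utilization >= 1.0:
        return float("inf")  # the server cannot keep up with the arrivals
    return service_time_s / (1.0 - utilization)


# Hypothetical what-if: same incoming work, two candidate servers.
ARRIVALS_PER_S = 16.0
service_time_by_option = {
    "current server": 0.050,  # seconds of service per request
    "faster server": 0.025,
}
predicted = {
    option: mm1_response_time(service_s, ARRIVALS_PER_S)
    for option, service_s in service_time_by_option.items()
}
for option, response_s in predicted.items():
    print(f"{option}: {response_s:.3f} s")
```

Halving the service time in this toy case cuts the predicted response time by much more than half, because the queueing delay shrinks along with the utilization.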

This eBook explores the modeling approach to capacity planning. However, regardless of the toolset you use, if any, the key is to adopt mature IT processes, including the strategic use of capacity planning.

This eBook is organized into a series of chapters, with Chapter 2 following this introduction:

- The Value of Capacity Planning: The second chapter is excerpted from a paper¹ written by Enterprise Management Associates, an analyst organization focused on the management software and services market. It explains the benefits of capacity planning.

- Capacity Planning Processes: In this chapter we discuss capacity planning in the context of other generally accepted IT processes, specifically using the ITIL model.

- How to Do Capacity Planning: This chapter illustrates a methodology for capacity planning with examples using Vityl Capacity Management.

- Using Capacity Planning for Server Consolidation: Server consolidation is a popular capacity planning technique for making IT operations more efficient. This chapter explains server consolidation and provides two real-world examples where capacity planning tools were used in consolidation projects.

- Capacity Planning and Service Level Management: This chapter discusses key aspects of service level management and the relationship between service level management and capacity planning.

- Bibliography: This eBook includes material from several resources listed here.

Whether you are learning about capacity planning for the first time, or you are an old hand looking for some new points of view on the subject, we hope this eBook will prove useful to you.

2. The Value of Capacity Planning

This chapter is excerpted from a white paper¹ by Enterprise Management Associates (EMA), an analyst organization specializing in the management software and services market.

Introduction

The boom-and-bust economy of the past five years has had an unprecedented effect on IT organizations. In the late 1990s and 2000, IT infrastructures grew at a frantic rate, creating a level of capacity and staffing that had never been seen before. Yet, with the economic tailspin of the subsequent three years, most corporations are now seeking new ways to leverage IT to improve business efficiency and automation—while severely restricting IT’s budgets for personnel, training, bandwidth, and equipment. This roller coaster of supply and demand has left many IT managers looking for new, better ways to manage their resources. While previous IT strategies focused primarily on managing growth, today’s IT organizations now are seeking methods to expand the number of applications and services available to support the business while cutting costs at the same time. In the current economy IT is increasingly being asked to do more with less.

From a server perspective, the rise and fall of IT has instigated several shifts in resource management. First, most IT organizations are looking to make better use of existing assets by consolidating underutilized server resources and re-purposing capacity that was purchased during the boom years, but has now been freed up by down-sizing and/or reorganization of corporate business units. In a recent EMA survey, “reclaiming and/or re-purposing hardware and software that is underutilized” was cited as a top priority by 57% of IT executives responding.

Second, IT organizations are re-thinking their previous “brute force” approach to provisioning server capacity. During the boom years, when growth was the top priority, many IT organizations responded to performance problems simply by purchasing additional servers to handle the load. Today, when every IT dollar is critical, this “over-provisioning” approach is viewed as wasteful and inefficient, particularly since downsizing, not growth, is the order of the day. In difficult economic times, IT organizations must be able to measure server utilization in sharp detail, and make the best possible use of every processor cycle.

Lastly, many IT organizations have grown weary of traditional server-by-server resource management efforts and are now evaluating next-generation technologies that would enable servers to pool and dynamically allocate their capacity to fit the specific needs of applications and services. This dynamic allocation of capacity, sometimes called “virtualization,” enables applications to draw capacity as needed from a collective infrastructure, rather than relying on dedicated servers. This virtualization technology could eventually pave the way for a new paradigm known as “utility computing,” in which users and/or applications draw only the capacity they need from a common infrastructure, just as homes or businesses draw only the water or electricity they need each month from their local utility.

How can enterprises re-purpose underutilized capacity, implement structured methods for provisioning servers, and prepare their IT environments for the coming wave of virtualization? The answer to all three questions is the same: capacity planning. By using capacity planning tools and processes, enterprises can measure server utilization trends, analyze future capacity needs, and predict the exact server requirement for a given application, service, or general time period. Some capacity planning toolsets also offer the ability to model the server environment, testing a variety of “what if” scenarios against proposed server configurations to determine the most efficient use of capacity and server resources. In the following section, we examine the capabilities of capacity planning and modeling tools, and evaluate the value that they can deliver to the enterprise.

Key Capacity Planning Value Points

- Reduce or eliminate server over-provisioning

- Identify and repurpose underutilized servers

- Reduce IT operational expenditures

- Reduce server downtime

- Improve server performance and availability

- Improve IT budget accuracy

- Leverage future technologies

The Value Proposition for Capacity Planning

In a difficult economy that has imposed severe restrictions on IT budgets, it may seem counterintuitive to invest in new technology as a means of cost control. Yet, in the case of capacity planning technology, a relatively small investment in software can lead to very large savings in server hardware and associated costs. While there may be some costs associated with deploying capacity planning tools and processes, the value of that investment is typically extremely high.

How is that value achieved? One way is through the reduction (or, in some cases, complete elimination) of server over-provisioning. Capacity planning tools enable IT organizations to benchmark the exact workload that a server handles over time, then project trends in traffic that the server might need to handle in the future. This data, in turn, enables the IT organization to determine the exact server requirements for a particular situation, ensuring that the server environment will have enough capacity at all times. This process contrasts sharply with the “estimated” capacity allocation method in which the IT organization simply guesses at the capacity requirement, then overbuys servers to ensure that there will always be sufficient processor power. In many cases, capacity planning may enable the enterprise to slash its server acquisition budget significantly.

The flip side of this server utilization data is that it helps enterprises to identify underutilized servers and capacity so that they can be consolidated or re-purposed for other applications and services. Capacity planning tools help organizations to identify server resources that might be idle, enabling IT departments to consolidate several applications or services on a single server, or reallocate server processor power to other services that may need the capacity. This is an essential capability in today’s IT organization, where capacity purchased during the “boom years” may be sitting idly while budgets for new server capacity have often been slashed to the bone.

Capacity planning can also help preserve another crucial enterprise resource: IT staffing. Over the course of a server’s life, most enterprises spend more money on maintenance, configuration, and upgrade of servers than they spent to purchase the server in the first place. For every underutilized server, there is a group of IT staff who have been tasked with installing, configuring, and maintaining that server. If server resources can be used more efficiently—either by eliminating servers or by enabling a single server to handle multiple applications—then the cost of IT staffing drops proportionately.

A related benefit of capacity planning is the reduction of server downtime. Under the “estimated” method of capacity allocation, enterprises typically purchase more servers than they need, then simply re-provision their servers when there is a capacity overload. As a result, most IT operations centers are tasked with maintaining and repairing more servers than they can use, yet they still experience outages or system performance problems when an overload forces the addition or hot-swapping of a new server in the environment. By contrast, enterprises that use capacity planning tools generally can anticipate overloads before they occur, thus reducing or eliminating workload-related outages while enabling the IT operations center to reduce the number of servers it must maintain.

Capacity planning can also improve the overall performance and availability of the server environment. While experienced IT staffers may be able to make an educated guess as to their capacity needs at any given time, such guesses are fallible and may not take all contingencies into account. As a result, many IT organizations that do not use capacity planning tools occasionally find their servers oversubscribed, delivering slow response times or timeouts that may slow business processes or cause customer dissatisfaction. With capacity planning tools, however, the enterprise can track trends—or, in some cases, model new applications or services—to anticipate potential performance and availability problems and eliminate them before they occur.

Capacity planning tools can also help deliver another key benefit in the current economy—budget predictability. During the boom years, enterprises could afford to provision their servers on the fly, over-provisioning new services at the outset and then purchasing new capacity when existing servers were oversubscribed. Under today’s tight budgets, however, the ability to predict future server needs is crucial to making intelligent buying decisions. Capacity planning enables the enterprise to develop an educated server purchasing strategy, and helps to reduce the need for “panic purchasing” situations that a server vendor might exploit to charge premium prices for its equipment.

Finally, capacity planning technology is an essential element in the emerging paradigms of system virtualization and utility computing. In order to create an effective pool of capacity to support all necessary applications and services, enterprises must have a way to track utilization and predict future capacity needs. This process of analyzing and projecting capacity requirements will become even more complex when enterprises begin to employ computing “grids” that are designed to support the broad diversity of applications and services used in the enterprise. In the utility computing model, capacity planning will become as important to the enterprise IT organizations as it is to power, telephone, or water utilities today.

The next chapter places capacity planning in the overall context of IT processes.

3. Capacity Planning Processes

The IT Infrastructure Library (ITIL)² describes a standard set of established processes by which an IT organization can achieve operational efficiencies and thereby deliver enhanced value to an organization. ITIL is most commonly used in Europe, originally having been created for the U.K. government. Because it provides a useful best-practice framework that can be put to use relatively inexpensively, ITIL is growing in popularity as a means to manage IT services within both commercial and governmental organizations.

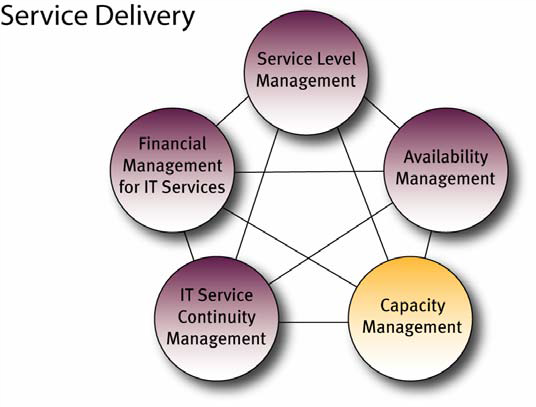

As shown in the following diagram, Capacity Management is part of the Service Delivery area of ITIL. Capacity Planning is part of the ITIL Capacity Management process.

Further, ITIL defines three sub-processes in the Capacity Management process:

- Business Capacity Management: This sub-process is responsible for ensuring that the future business requirements for IT Services are considered, planned and implemented in a timely fashion.

- Service Capacity Management: The focus of this sub-process is management of the performance of live, operational IT Services.

- Resource Capacity Management: The focus in this sub-process is the management of the individual components of the IT infrastructure.

The types of capacity planning that we will cover in this eBook focus on the last two sub-processes, service and resource capacity management. Specifically, when we build a single system model, we are usually performing aspects of resource capacity management rather than service capacity management. This is because services typically span multiple systems. When we combine models from multiple systems, then we are performing aspects of service capacity management.

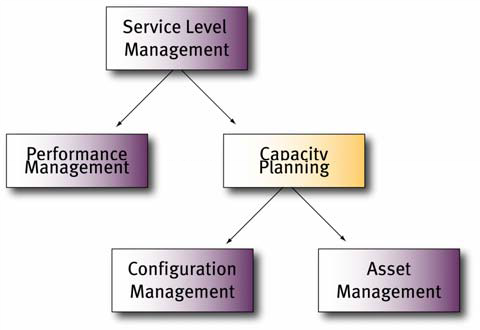

The capacity planning function within capacity management interfaces with many other processes as indicated in the following diagram:

As you can see, service level management is where all good IT processes begin, since this is the crux of the problem. At a minimum, service level management provides service definitions, service level targets, business priorities, and growth plans. Within given architectural constraints, capacity planning determines the cheapest optimal hardware and software configuration that will achieve required service levels, both now and in the future. Capacity planning then feeds configuration and asset management to make this all a reality so that service delivery can begin. On another process branch, performance management verifies capacity plans and service levels and provides revised input into capacity planning when necessary.

The next chapter provides a high-level approach to capacity planning best practices.

4. How to Do Capacity Planning

It is very common for an IT organization to manage system performance reactively, analyzing and correcting performance problems as users report them. When problems occur, system administrators ideally have the tools necessary to analyze and remedy the situation quickly. In a perfect world, administrators prepare in advance to avoid performance bottlenecks altogether, using capacity planning tools to predict how servers should be configured to handle future workloads adequately.

The goal of capacity planning is to provide satisfactory service levels to users in a cost-effective manner. This chapter describes the fundamental steps for performing capacity planning. Real-life examples are provided using Vityl Capacity Management.

Three Steps for Capacity Planning

In this chapter we will illustrate three basic steps for capacity planning:

- Determine Service Level Requirements: The first step in the capacity planning process is to categorize the work done by systems and to quantify users’ expectations for how that work gets done.

- Analyze Current Capacity: Next, the current capacity of systems must be analyzed to determine how they are meeting the needs of the users.

- Plan for the Future: Finally, using forecasts of future business activity, future system requirements are determined. Implementing the required changes in system configuration will ensure that sufficient capacity will be available to maintain service levels, even as circumstances change in the future.

Determine Service Level Requirements

We have organized this section as follows:

- The overall process of establishing service level requirements first demands an understanding of workloads. We will explain how you can view system performance in business terms rather than technical ones, using workloads.

- Next, we begin an example, showing workloads on a system running a back-end Oracle database.

- Before setting service levels, you need to determine what unit you will use to measure the incoming work.

- Finally, you establish service level requirements, the promised level of performance that will be provided by the IT organization.

Workloads Explained

From a capacity planning perspective, a computer system processes workloads (which supply the demand) and delivers service to users. During the first step in the capacity planning process, these workloads must be identified and a definition of satisfactory service must be created.

A workload is a logical classification of work performed on a computer system. If you consider all the work performed on your systems as a pie, a workload can be thought of as some piece of that pie. Workloads can be classified by a wide variety of criteria:

- who is doing the work (particular user or department)

- what type of work is being done (order entry, financial reporting)

- how the work is being done (online inquiries, batch database backups)

It is useful to analyze the work done on systems in terms that make sense from a business perspective, using business-relevant workload definitions. For example, if you analyze performance based on workloads corresponding to business departments, then you can establish service level requirements for each of those departments.

Business-relevant workloads are also useful when it comes time to plan for the future. It is much easier to project future work when it is expressed in terms that make business sense. For example, it is much easier to separately predict the future demands of the human resources department and the accounts payable department on a consolidated server than it is to predict the overall increase in transactions for that server.

In a nutshell, workload characterization requires you to tell Vityl Capacity Management how to determine what resource utilization goes with which workload. This is done on a per process level, using selection criteria to tell the Vityl framework to determine which processes belong to which workloads.
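The general idea of per-process workload characterization can be sketched as follows. The rule format, the workload names other than APPOINTMENTS, and the process records are hypothetical; this is not the selection-criteria syntax of Vityl Capacity Management, only an illustration of mapping processes to workloads and summing their resource usage.

```python
import fnmatch
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ProcessSample:
    command: str
    user: str
    cpu_seconds: float


# Hypothetical selection criteria: (workload, command pattern, user pattern).
# Rules are checked in order; the first match wins.
RULES: List[Tuple[str, str, str]] = [
    ("APPOINTMENTS", "appt*", "*"),
    ("BACKUP", "*backup*", "root"),
]
DEFAULT_WORKLOAD = "OTHER"


def classify(sample: ProcessSample) -> str:
    """Return the workload that a process belongs to."""
    for workload, command_pattern, user_pattern in RULES:
        if fnmatch.fnmatchcase(sample.command, command_pattern) and fnmatch.fnmatchcase(
            sample.user, user_pattern
        ):
            return workload
    return DEFAULT_WORKLOAD


def cpu_by_workload(samples: List[ProcessSample]) -> Dict[str, float]:
    """Total CPU seconds consumed by each workload."""
    totals: Dict[str, float] = defaultdict(float)
    for sample in samples:
        totals[classify(sample)] += sample.cpu_seconds
    return dict(totals)


samples = [
    ProcessSample("appt_server", "alice", 12.0),
    ProcessSample("appt_server", "bob", 8.0),
    ProcessSample("nightly_backup", "root", 3.0),
    ProcessSample("sshd", "root", 0.5),
]
print(cpu_by_workload(samples))
```

Keeping a catch-all workload such as OTHER means that every process is accounted for, so the workload totals still add up to the whole "pie".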

Determine the Unit of Work

For capacity planning purposes it is useful to associate a unit of work with a workload. This is a measurable quantity of work done, as opposed to the amount of system resources required to accomplish that work.

To understand the difference, consider measuring the work done at a fast food restaurant. When deciding on the unit of work, you might consider counting the number of customers served, the weight of the food served, the number of sandwiches served, or the money taken in for the food served. This is as opposed to the resources used to accomplish the work, i.e. the amount of French fries, raw hamburgers or pickle slices used to produce the food served to customers.

When talking about IT performance, instead of French fries, raw hamburger or pickle slices, we accomplish work using resources such as disk, I/O channels, CPUs and network connections. Measuring the utilization of these resources is important for capacity planning, but not relevant for determining the amount of work done or the unit of work. Instead, for an online workload, the unit of work may be a transaction. For an interactive or batch workload, the unit of work may be a process.

The examples given in this chapter use a server running an appointment scheduling application process, so it seems logical to use a “calendar request” as the unit of work. A calendar request results in an instance of an appointment process being executed.
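The distinction between work done and resources consumed can be made concrete with a small calculation. The sketch below counts calendar requests as the unit of work and derives the CPU time consumed per request; the measurement interval, busy time, and request count are hypothetical numbers chosen for illustration.

```python
def throughput(completed_units: int, interval_seconds: float) -> float:
    """Units of work completed per second."""
    return completed_units / interval_seconds


def service_demand(busy_seconds: float, completed_units: int) -> float:
    """Resource time consumed per unit of work (busy time / completions)."""
    if completed_units <= 0:
        raise ValueError("at least one completed unit of work is required")
    return busy_seconds / completed_units


# Hypothetical one-hour measurement of the appointment workload.
INTERVAL_S = 3600.0
CPU_BUSY_S = 1800.0        # resource consumed
CALENDAR_REQUESTS = 9000   # work done, counted in units of work

requests_per_s = throughput(CALENDAR_REQUESTS, INTERVAL_S)
cpu_s_per_request = service_demand(CPU_BUSY_S, CALENDAR_REQUESTS)
print(requests_per_s, cpu_s_per_request)
```

Multiplying the two results gives the CPU utilization for the interval (throughput times demand per request), which is what links a forecast expressed in units of work back to resource usage.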

Establish Service Levels

The next step is to establish a service level agreement. A service level agreement is an agreement between the service provider and service consumer that defines acceptable service. The service level agreement is often defined from the user's perspective, typically in terms of response time or throughput. Using workloads often aids in the process of developing service level agreements, because workloads can be used to measure system performance in ways that make sense to clients/users. In the case of our appointment scheduling application, we might establish service level requirements regarding the number of requests that should be processed within a given period of time, or we might require that each request be processed within a certain time limit. These possibilities are analogous to a fast food restaurant requiring that a certain number of customers should be serviced per hour during the lunch rush, or that each customer should have to wait no longer than three minutes to have his or her order filled.

Ideally, service level requirements are ultimately determined by business requirements. Frequently, however, they are based on past experience. It’s better to set service level requirements to ensure that you will accomplish your business objectives, but not surprisingly people frequently resort to setting service level requirements like, “provide a response time at least as good as is currently experienced, even after we ramp up our business.” As long as you know how much the business will “ramp up,” this sort of service level requirement can work.

If you want to base your service level requirements on present actual service levels, then you may want to analyze your current capacity before setting your service levels.

Analyze Current Capacity

There are several steps that should be performed during the analysis of capacity measurement data.

- First, compare the measurements of any items referenced in service level agreements with their objectives. This provides the basic indication of whether the system has adequate capacity.

- Next, check the usage of the various resources of the system (CPU, memory, and I/O devices). This analysis identifies highly used resources that may prove problematic now or in the future.

- Look at the resource utilization for each workload. Ascertain which workloads are the major users of each resource. This helps narrow your attention to only the workloads that are making the greatest demands on system resources.

- Determine where each workload is spending its time by analyzing the components of response time, allowing you to determine which system resources are responsible for the greatest portion of the response time for each workload.

Measure Service Levels and Compare to Objectives

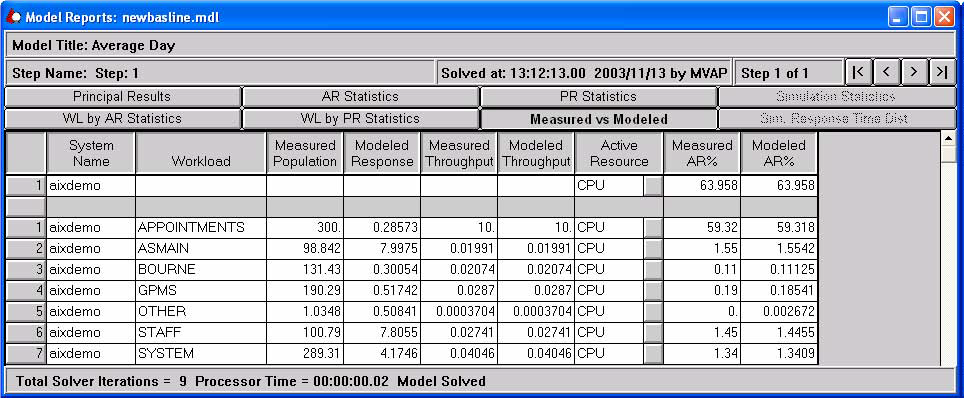

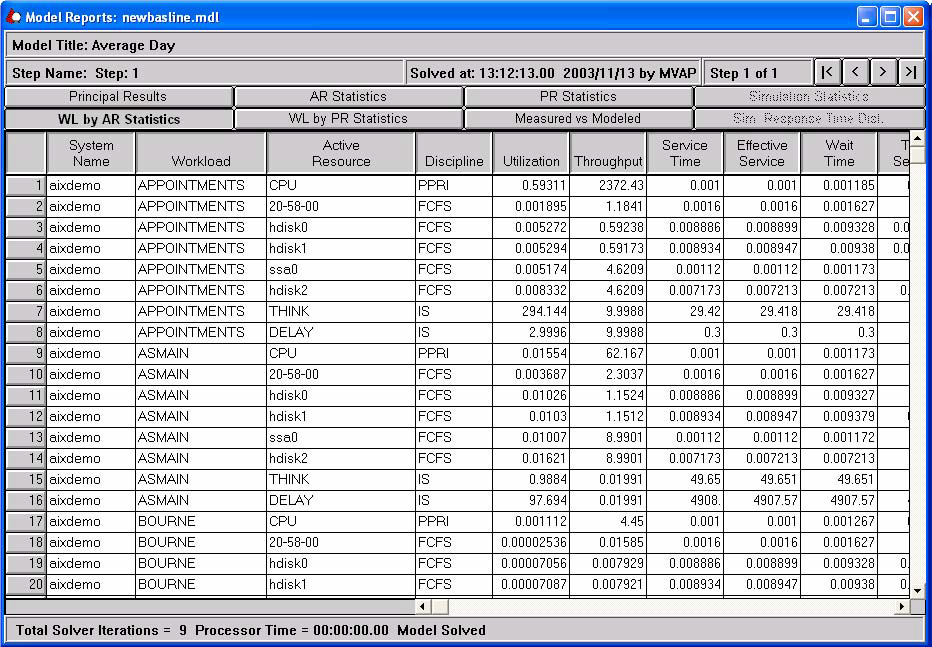

Vityl Capacity Management can help us check measured service levels against objectives. For example, after building a model of our example system for a three-hour window, 7:00 AM – 10:00 AM, the display below (Figure 4-6) shows the response time and throughput of the seven workloads that were active during this time.

By looking at the top line of the table, you can tell that the model has been successfully calibrated for our example system, because the total Measured AR% and Modeled AR% are equal. “AR” stands for “Active Resource.” An active resource is a resource that is made 100% available once it has been allocated to a waiting process. In this case, the active resource is CPU.

Because no changes have been made in the system configuration, modeled response time and throughput for each workload should closely match reality. In our example, response time means the amount of time required to process a unit of work, which in the case of our application is an appointment request process. So this report provides us with an average appointment request response time for each of our workloads that we can compare with desired service levels.
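Comparing measured service levels with objectives amounts to a simple check per workload. The sketch below assumes objectives expressed as a maximum average response time and a minimum hourly throughput; the class names and all numbers are hypothetical and are not taken from the report described above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Objective:
    max_response_s: float
    min_throughput_per_hour: float


@dataclass(frozen=True)
class Measurement:
    response_s: float
    throughput_per_hour: float


def violations(
    objectives: Dict[str, Objective], measured: Dict[str, Measurement]
) -> List[str]:
    """List every objective that the measurements fail to meet."""
    problems: List[str] = []
    for workload, objective in objectives.items():
        result = measured.get(workload)
        if result is None:
            problems.append(f"{workload}: no measurement available")
            continue
        if result.response_s > objective.max_response_s:
            problems.append(
                f"{workload}: response time {result.response_s:.2f} s "
                f"exceeds {objective.max_response_s:.2f} s"
            )
        if result.throughput_per_hour < objective.min_throughput_per_hour:
            problems.append(
                f"{workload}: throughput {result.throughput_per_hour:.0f}/h "
                f"is below {objective.min_throughput_per_hour:.0f}/h"
            )
    return problems


# Hypothetical objective and measurement for one workload.
objectives = {"APPOINTMENTS": Objective(max_response_s=2.0, min_throughput_per_hour=1000.0)}
measured = {"APPOINTMENTS": Measurement(response_s=2.4, throughput_per_hour=1200.0)}
report = violations(objectives, measured)
print(report)
```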

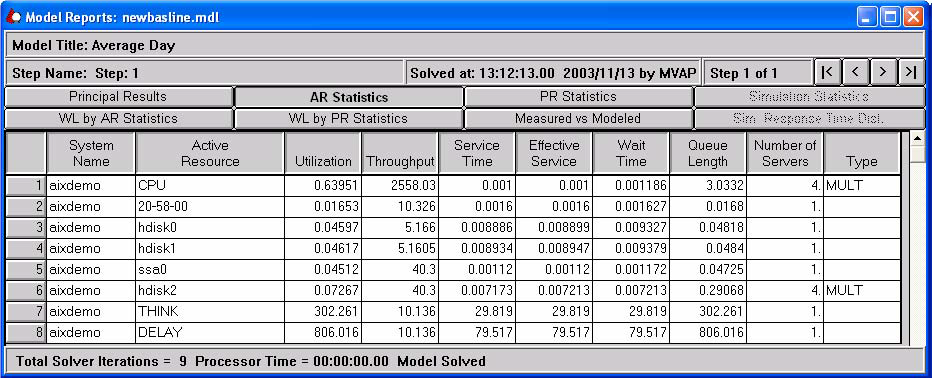

Measure Overall Resource Usage

It is also important to take a look at each resource within your systems to see if any of them are saturated. If you find a resource that is running at 100% utilization, then any workloads using that resource are likely to have poor response time. If your goal is throughput rather than response time, utilization is still very important. If you have two disk controllers, for example, and one is 50% utilized and the other is swamped, then you have an opportunity to improve throughput by spreading the work more evenly between the controllers.

The table above shows the overall utilization of each resource in our example server. The four CPUs are shown together and treated as one resource; every other resource is shown separately.

Notice that CPU utilization is about 64% over this period of time (7:00 AM – 10:00 AM on January 02). This corresponds with the burst of CPU utilization that was shown earlier.

No resource in the report seems to be saturated at this point, though hdisk2 is getting a lot more of the I/O than either hdisk0 or hdisk1. This might be worthy of attention; future increases in workloads might make evening out the disparity in disk usage worthwhile.
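The two checks described in this section, looking for saturated resources and for uneven use of similar resources, can be sketched in a few lines. Only the CPU figure below comes from the text; the disk utilizations and the 90% threshold are made-up values for illustration.

```python
from typing import Dict, List


def saturated(utilization_pct: Dict[str, float], threshold_pct: float = 90.0) -> List[str]:
    """Names of resources at or above the saturation threshold."""
    return sorted(name for name, pct in utilization_pct.items() if pct >= threshold_pct)


def imbalance(utilization_pct: Dict[str, float], names: List[str]) -> float:
    """Ratio of the busiest to the least busy of the named resources."""
    values = [utilization_pct[name] for name in names]
    lowest = min(values)
    return float("inf") if lowest == 0 else max(values) / lowest


# CPU figure from the text; disk figures are hypothetical.
utilization = {"CPU": 64.0, "hdisk0": 5.0, "hdisk1": 4.0, "hdisk2": 30.0}
hot = saturated(utilization)
disk_skew = imbalance(utilization, ["hdisk0", "hdisk1", "hdisk2"])
print(hot, disk_skew)
```

A high skew with no saturated resource matches the situation described above: nothing needs fixing today, but the disparity is worth tracking as workloads grow.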

Measure Resource Usage by Workload

This figure shows the same period again, only now resource utilization is displayed for the APPOINTMENTS workload. Note that this particular workload is using 59% of the CPU resource, nearly all of the 64% utilization that the previous table showed as the total utilization by all workloads. Clearly, the APPOINTMENTS workload is where a capacity planner would want to focus his or her attention, unless it is known that future business needs will increase the amount of work to be done by other workloads on this system. In our example, that is not the case. Ramp-ups in work are expected mainly for the APPOINTMENTS workload.

The previous charts and tables show that CPU utilization is likely to be the determining factor if the amount of work that our system is expected to perform increases in the future. Furthermore, they show that the APPOINTMENTS workload is the primary user of the CPU resources on this system.

This same sort of analysis can work no matter how you choose to set up your workloads. In our example, we chose to treat appointment processes as a workload. Your needs may cause you to set up your workloads to correspond to different business activities, such as a Wholesale Lumber unit vs. Real Estate Development, thus allowing you to analyze performance based on the different requirements of your various business units.

Identify Components of Response Time

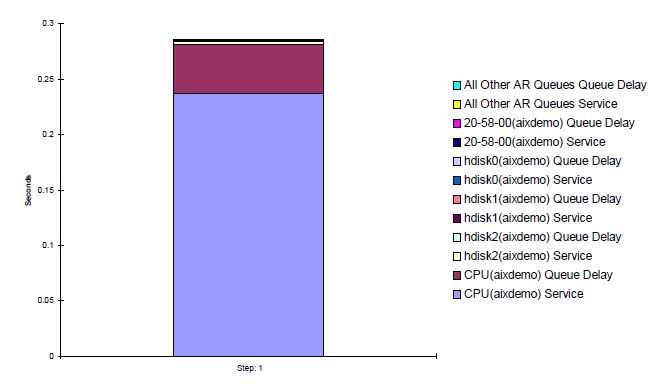

Next we will show how to determine which system resources are responsible for the time required to process a unit of work. The resources responsible for the greatest share of the response time indicate where you should concentrate your efforts to optimize performance. Using Vityl Capacity Management, you can determine the components of response time on a workload-by-workload basis, and you can predict what the components will be after a ramp-up in business or a change in system configuration. A components-of-response-time analysis shows the average resource or component usage time for a unit of work, that is, the contribution of each component to the total time required to complete a unit of work.

The above figure shows the components of response time for the APPOINTMENTS workload. Note that CPU service time comprises the vast majority of the time required to process an appointment. Queuing delay, the time spent waiting for a CPU, is responsible for the rest. I/O resources made only a negligible contribution to the total amount of time needed to process each appointment request.

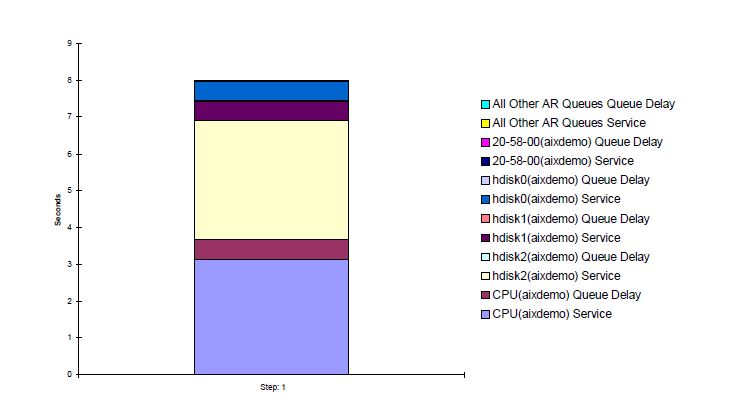

The ASMAIN workload, shown in the accompanying figure, is more balanced. There is no single resource that is the obvious winner in the contest for the capacity planner’s attention, although the queue delay for hdisk2 is worth noting.
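A components-of-response-time breakdown like the ones discussed in this section is, arithmetically, each component's time divided by the total time per unit of work. The sketch below uses hypothetical component times (not the values from the figures) to show the calculation and how to pick out the dominant component.

```python
from typing import Dict


def breakdown(component_seconds: Dict[str, float]) -> Dict[str, float]:
    """Fraction of total response time contributed by each component."""
    total = sum(component_seconds.values())
    if total <= 0:
        raise ValueError("total response time must be positive")
    return {name: seconds / total for name, seconds in component_seconds.items()}


def dominant(component_seconds: Dict[str, float]) -> str:
    """Component that contributes the most time per unit of work."""
    return max(component_seconds, key=component_seconds.get)


# Hypothetical average seconds per unit of work for one workload.
components = {
    "CPU service": 0.30,
    "CPU queue delay": 0.08,
    "hdisk2 service": 0.01,
    "hdisk2 queue delay": 0.01,
}
shares = breakdown(components)
print(dominant(components), shares)
```

Separating service time from queue delay matters because the remedies differ: queue delay falls when contention is relieved, while service time falls only when the work itself takes less resource time.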

Plan for the Future

How do you make sure that a year from now your systems won’t be overwhelmed and your IT budget over-extended? Your best weapon is a capacity plan based on forecasted processing requirements. You need to know the expected amount of incoming work, by workload; then you can calculate the optimal system configuration for satisfying service levels.

Follow these steps:

- First, you need to forecast what your organization will require of your IT systems in the future.

- Once you know what to expect in terms of incoming work, you can use modeling software to determine the optimal system configuration for meeting service levels on into the future.

Determine Future Processing Requirements

Systems may be satisfying service levels now, but will they be able to do that while at the same time meeting future organizational needs?

Besides service level requirements, the other key input into the capacity planning process is a forecast or plan for the organization’s future. Capacity planning is really just a process for determining the optimal way to satisfy business requirements such as forecasted increases in the amount of work to be done, while at the same time meeting service level requirements.

Future processing requirements can come from a variety of sources. Input from management may include:

- Expected growth in the business

- Requirements for implementing new applications

- Planned acquisitions or divestitures

- IT budget limitations

- Requests for consolidation of IT resources

Additionally, future processing requirements may be identified from trends in historical measurements of incoming work such as orders or transactions.
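As a rough illustration of identifying a trend in historical measurements, the sketch below fits a least-squares line to monthly counts of incoming work and extrapolates it. The order counts are hypothetical, and a straight line is only one possible assumption about growth. A forecast like this supplies an input to the capacity model; it does not say how the system will respond to that load.

```python
def linear_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(values, periods_ahead):
    """Extrapolate the fitted line periods_ahead periods past the last observation."""
    slope, intercept = linear_trend(values)
    return intercept + slope * (len(values) - 1 + periods_ahead)


# Hypothetical monthly order counts (illustrative only).
monthly_orders = [1000, 1040, 1080, 1120, 1160, 1200]
print(round(forecast(monthly_orders, 12)))  # projected orders 12 months out
```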

Plan Future System Configuration

After system capacity requirements for the future are identified, a capacity plan should be developed to prepare for them. The first step in doing this is to create a model of the current configuration. From this starting point, the model can be modified to reflect the future capacity requirements. If the results of the model indicate that the current configuration does not provide sufficient capacity for the future requirements, then the model can be used to evaluate configuration alternatives to find the optimal way to provide sufficient capacity.

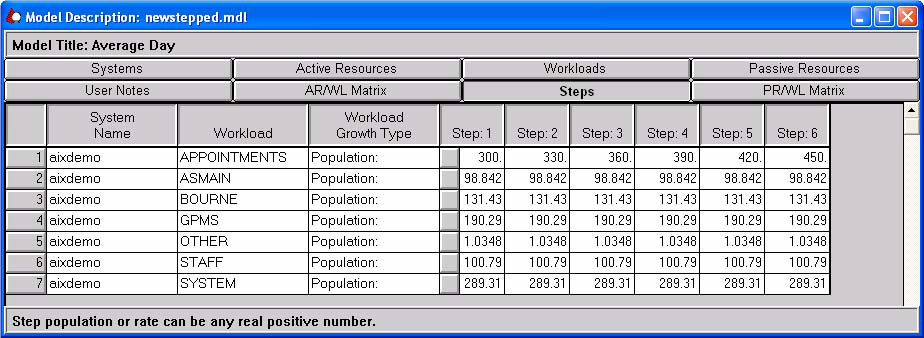

Our base model was representative of 300 users generating appointment/calendar requests on our system. From there we have added “steps” in increments of 30 users until the incoming work has increased by one-half again as much. This ramp-up in work has been added only for the APPOINTMENTS workload, because no such increase was indicated for other workloads when we did our analysis of future processing requirements in the previous section.
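The ramp-up described above works out to six modeling steps, ending at 450 users. A short Python sketch of the arithmetic (the variable names are ours, chosen for illustration):

```python
BASE_USERS = 300   # users represented by the base model
STEP = 30          # users added at each step
GROWTH = 0.5       # "one-half again as much" incoming work

final_users = int(BASE_USERS * (1 + GROWTH))
steps = list(range(BASE_USERS, final_users + 1, STEP))
print(steps)  # [300, 330, 360, 390, 420, 450]
```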

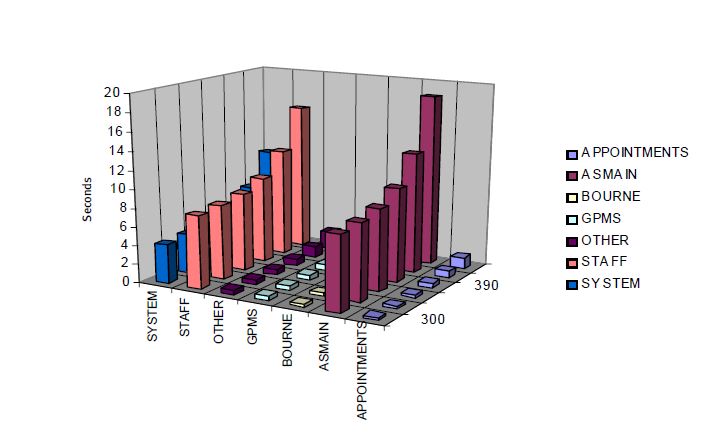

Vityl will predict performance of the current system configuration for each of the steps we have set up. Below is a chart generated using Vityl Capacity Management showing the predicted response time for each workload.

As we can see, the response time starts to elongate after 390 users.

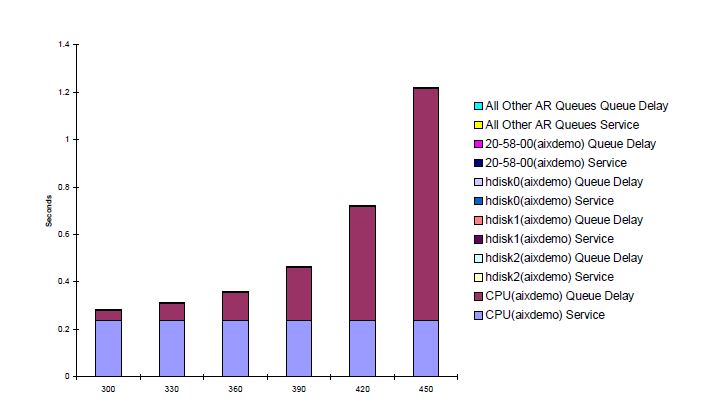

Below is a stacked bar chart of predicted response time that also shows the components of response time. Notice the substantial increase in CPU wait time after the number of users reaches 360. It seems that the performance bottleneck is the CPU.

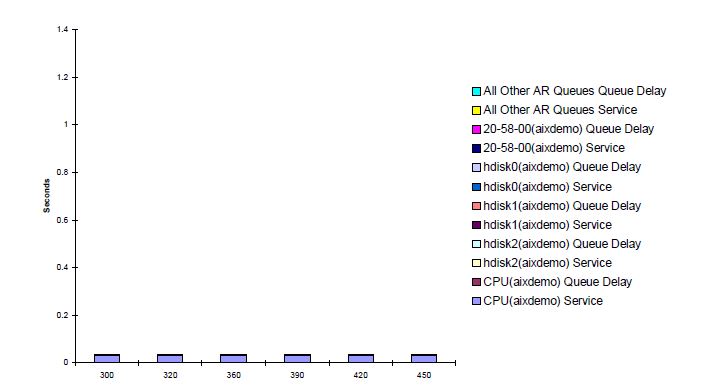

The graph below shows the same stacked bar chart, but this time Vityl was told to predict performance if the system were changed to a p670 1100 MHz 4-CPU system. Clearly, the newer, faster architecture not only allows for substantial growth, but also reduces overall response time to a more realistic level and still leaves headroom for additional growth if needed.
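To give a feel for the kind of calculation an analytic queuing model performs, here is a minimal sketch using the textbook M/M/c (Erlang C) formula. It is not Vityl's model, and it considers the CPU only. The request rate per user, the service times, and the CPU counts are all hypothetical values chosen for illustration; they do not describe the systems shown in the charts.

```python
from math import factorial


def mmc_response_time(arrival_rate, service_time, servers):
    """Mean response time of an M/M/c queue (service time plus Erlang C wait)."""
    offered = arrival_rate * service_time          # offered load in Erlangs
    rho = offered / servers                        # per-server utilization
    if rho >= 1:
        return float("inf")                        # saturated: queue grows without bound
    tail = offered ** servers / factorial(servers) / (1 - rho)
    head = sum(offered ** k / factorial(k) for k in range(servers))
    p_wait = tail / (head + tail)                  # probability a request must queue
    wait = p_wait * service_time / (servers * (1 - rho))
    return service_time + wait


# Hypothetical inputs (illustrative only, not measured values).
REQS_PER_USER_PER_SEC = 0.004
CURRENT = {"service_time": 1.0, "servers": 2}      # assumed current configuration
UPGRADE = {"service_time": 0.5, "servers": 4}      # assumed faster CPUs, more of them

for users in range(300, 451, 30):
    rate = users * REQS_PER_USER_PER_SEC
    now = mmc_response_time(rate, **CURRENT)
    new = mmc_response_time(rate, **UPGRADE)
    print(f"{users} users: current {now:.2f}s, upgraded {new:.2f}s")
```

Even this simple model reproduces the qualitative behavior in the charts: wait time grows slowly at first and then climbs steeply as utilization approaches saturation, while a faster configuration with more CPUs keeps response time nearly flat over the same range.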

Capacity Planning Process

In summary, we have shown these basic steps toward developing a capacity plan:

- Determine service level requirements

  - Define workloads

  - Determine the unit of work

  - Identify service levels for each workload

- Analyze current system capacity

  - Measure service levels and compare to objectives

  - Measure overall resource usage

  - Measure resource usage by workload

  - Identify components of response time

- Plan for the future

  - Determine future processing requirements

  - Plan future system configuration

By following these steps, you can help to ensure that your organization will be prepared for the future, ensuring that service level requirements will be met using an optimal configuration. You will have the information necessary to purchase only what you need, avoiding over-provisioning while at the same time assuring adequate service.

The next chapter provides example applications of capacity planning for today’s data center issues.

5. Using Capacity Planning for Server Consolidation

With the right tools, you can locate underutilized capacity and move applications and subsystems to take advantage of that capacity, oftentimes delaying the purchase of additional servers. This is just one of many IT optimization strategies that fall under the definition of “server consolidation.”

This chapter explains server consolidation and provides two real-world examples where performance management and capacity planning tools were used in consolidation projects. These projects saved the organizations involved thousands of dollars in one case, and millions in another, mainly by helping them to use resources they already had on hand.

Server Consolidation Defined

Consolidation by definition is the act that brings together separate parts into a single whole. Typically, server consolidation refers to moving work or applications from multiple servers to a single server. Server consolidation is just one aspect of capacity planning.

Why, Oh Why?

There are many reasons to consolidate servers, but the underlying reason is ultimately, “To save money.” The goal is to lower the Total Cost of Ownership (TCO) of information technology and increase Return on Investment (ROI). By increasing efficiency, IT departments can do more with less and make optimal use of what they already have. In a typical server consolidation effort, you can increase efficiency by:

- Reducing complexity

- Reducing staff requirements

- Increasing manageability

- Reducing costs for physical space, hardware systems, and software

- Increasing stability/availability

This chapter will provide examples where server consolidation was used to increase efficiency by:

- Delaying new purchases

- Extending the life of existing servers

- Optimally utilizing existing servers

When mainframes ruled the earth, everything ran on one of a few big boxes. This simplified management. Now even where there are mainframes in an organization there are frequently a great many distributed servers as well. Running many distributed boxes offers a great deal of flexibility, and when business departments “own” those boxes rather than IT departments, they get to have their own playground. Applications can be rolled out without going through a bureaucratic quagmire, and expensive mainframe expertise is not required. This flexibility allows for rapid deployment, but the cost is a management nightmare. Decentralized management and control means a hodge-podge of servers and techniques used to manage them. Management is primarily reactive, oftentimes with data center managers being consulted only after disaster strikes, when problems are far out of hand. Because of this, it is not unusual for the IT department to provide the impetus to consolidate servers. Today there is a general trend toward server consolidation. The idea is to introduce management where chaos previously ruled, reducing complexity and optimizing existing IT resources.

Finding the Answers Can Be Difficult

Server consolidation sounds simple enough, but it is not. How much capacity is available on each server? What resources are underutilized? Is it the CPU? What about memory? What happens if work is moved in from another server? Will there be enough resources for both applications? Will I/O be a bottleneck after the move? What will response time be like?

Can more than one application be moved to that server? What are the overall effects of increasing the work on that server? Is there any space for future growth? How long will it be before business workloads increase to the point that the CPU or other resources are over-utilized?

These are all real concerns and legitimate questions when talking about server consolidation, and without the proper tools, finding answers can be difficult.

Predict the Future

The tool of choice for many server consolidation experts is Vityl Capacity Management, which uses analytic modeling based on queuing theory to rapidly evaluate various hypothetical system configurations. It also predicts performance based on projected business growth. Most importantly for this chapter, it can predict how applications will perform after servers are consolidated.

With Vityl Capacity Management you can:

- Measure work being done on each individual server.

- Model effects of consolidating work from multiple servers onto a single server.

- Quickly identify any performance bottlenecks that are likely to be caused by the additional work being done on the server.

- Predict effects of future growth in your business using “what if” scenarios. What if the work grows by “X” percent over “Y” amount of time?

- Predict effect on performance of changing hardware configurations. What if another CPU is added? What if disk drives are added, or another controller? What if the make or model of server is changed?

The most important benefit of using Vityl Capacity Management is that you can see the effects of changes before any work or data are actually moved. You can then be more confident regarding the effects of the server consolidation before you make any purchases and before data or applications are moved.

Case Studies

Below are two examples of how server consolidation was used in different situations, saving both companies money. The examples are real, but we have changed the names to protect our customers’ interests. “Company A” used capacity planning and server consolidation to accomplish big cost savings in their disaster recovery/management plans. “Company B” discovered they could make do with existing capacity, and canceled an order for a large server.

Company A – Finding Underutilized Resources for Use as Disaster Backup

A disaster management consultant was hired for Company A. Company A had three locations: one in California, the second in New York, and the third in Kansas City. All three sites used a large SAN environment so that each site had access to the same data. There were a total of some 900 servers. The consultant was to come up with a disaster management plan that would be able to recover 200 of the most critical servers in case of a disaster. Of these mission-critical servers, 100 were located in the California office, 50 in New York, and 50 in Kansas City. In case of a disaster, the mission-critical servers had to be recovered and operational within a 24-hour time period.

The consultant started looking at the workloads and utilizations of each server, and found that there was one business application per server. Upon interviewing system administrators (SAs), the consultant learned that Company A had at one point tried running multiple applications per server. This resulted in unacceptably poor performance, with the people responsible for the applications pointing fingers at each other.

The company thought they could resolve the problem quickly by putting each individual business application on its own server. This turned out to be a very difficult, time consuming and expensive task. To avoid having to separate applications again in the future, a company-wide policy was instituted requiring each business application be hosted on its own dedicated server.

Hindsight being 20-20, it would have been prudent to have Vityl Capacity Management installed on the servers, helping to analyze performance bottlenecks. It might have been possible to eliminate problems with just a few carefully chosen changes or upgrades. Even better, Vityl Capacity Management could have been used to explore alternative configurations to meet capacity requirements.

Instead, the company chose what appeared to be the most expedient solution to their performance finger-pointing problem; they hosted just one application per server. This is not an uncommon solution, but it usually leads to inefficient use of IT resources.

Using Vityl Capacity Management, the disaster management consultant analyzed a few of the larger critical servers and found they were only 3% to 6% utilized at any given time of the day or night. There were vast amounts of underutilized capacity that could potentially be used as backup in the event of a disaster.

The consultant then used Vityl Capacity Management to evaluate a potential 10-to-1 server consolidation. He modeled 10 of the mission critical servers from California, and moved the work to a mission critical server in Kansas City. The model showed that even with the consolidation of these servers, the server in Kansas City would be less than 71% utilized.

The consultant then did an analysis of the historical growth of the workloads on servers that resided in California for the preceding year. He used what-if analysis capabilities of Vityl Capacity Management to predict the utilization for the next 12 months based on the growth patterns of the previous year. This revealed that after the consolidation and the projected growth for the next 12 months, the server would still be less than 80% utilized.
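A first-order version of this kind of consolidation check can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the analysis the consultant performed: it considers CPU utilization alone, ignores queuing, memory, and I/O effects, and uses hypothetical utilizations, capacity ratings, and growth rates.

```python
def consolidated_utilization(target_util, sources, target_capacity):
    """Estimate target CPU utilization after absorbing work from source servers.

    sources is a list of (utilization, capacity) pairs, where capacity is a
    relative CPU power rating in the same units as target_capacity.
    """
    moved_work = sum(util * capacity for util, capacity in sources)
    return target_util + moved_work / target_capacity


def projected_utilization(utilization, monthly_growth, months):
    """Apply compound monthly workload growth to a utilization figure."""
    return utilization * (1 + monthly_growth) ** months


# Hypothetical inputs (illustrative only): ten source servers at 5% utilization,
# each with the same relative capacity as the target, which is itself 5% busy.
sources = [(0.05, 1.0)] * 10
after_move = consolidated_utilization(0.05, sources, target_capacity=1.0)
after_year = projected_utilization(after_move, monthly_growth=0.01, months=12)

print(f"after consolidation: {after_move:.0%}")
print(f"after 12 months of growth: {after_year:.0%}")
```

If the projected figure stays below the utilization ceiling you are comfortable with, the consolidation is worth examining in more detail with a full model.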

The consultant convinced management to let him use the server in Kansas City as a backup site for the 10 mission-critical servers. He mirrored the individual servers from California to the one server in Kansas City, and found performance to be within a small percentage of what had been projected with Vityl Capacity Management.

The consultant is currently modeling the entire enterprise, including all three sites in the analysis. It is quite likely that no new computer resources will be required to provide disaster back-up, resulting in a very significant savings, a very pleasant surprise for upper management.

Company B – Avoiding Unnecessary Expenditures

Company B was a small company with a System Administrator (SA) who was in charge of six servers within his department. One of those servers was leased, and the lease was expiring at the end of the month. The hardware vendor convinced Company B to order the newest, latest, greatest (and most expensive) server on the market. If the new server arrived as scheduled, service levels could be maintained, but the SA was concerned. What would he do if the new server did not arrive before the old leased equipment had to be returned? The work being done on the leased server included an instance of Oracle and two custom applications. Could that processing be temporarily moved to any of the other five servers?

The SA used Vityl Capacity Management to analyze all six servers. He found that no single server would be able to take the entire load of the leased server, but he did find a way to distribute the load over two existing machines. He could move the Oracle instance to a machine that had another small instance of Oracle running on it. If he added two more CPUs to that server, there would be sufficient capacity for the next 12 months with the current growth patterns. He found another server that had enough capacity to host the custom applications without any modifications for the next 12 months.

With sufficient capacity to cover growth over the next 12 months, there was no need for the leased server or the replacement server that had been ordered. The SA quickly took these findings to his management, and the changes were successfully implemented over the next weekend. The order for the new server was cancelled, and the SA received a healthy bonus for saving the company a substantial amount of money.

Conclusion

These are just two examples of how using a good capacity planning tool to perform server consolidation can save money. Company B saved thousands of dollars, and Company A literally saved millions, dramatically demonstrating that performance management and capacity planning are best done with the proper tools. To do otherwise creates risk instead of minimizing it.

To make optimal use of your organization’s IT resources, you need to know exactly the amount of capacity you have available and how that capacity can be utilized to its fullest.

6. Capacity Planning and Service Level Management

In this chapter we discuss service level management and its relationship to capacity planning.

We hear a lot about service level management today, but what do we really mean by it? Below we have definitions of service level management and capacity planning. The service level definition is from Sturm et al., Foundations of Service Level Management [5].

Service Level Management (SLM) is the disciplined, proactive methodology and procedures used to ensure that adequate levels of service are delivered to all IT users in accordance with business priorities and at an acceptable cost [5].

Capacity planning is the process by which an IT department determines the amount of server hardware resources required to provide the desired levels of service for a given workload mix for the least cost.

You can clearly see that these two critical IT processes share a common goal: providing adequate service for the least cost. So how do you really do this? You must employ an integrated approach to service level management and capacity planning, as this section describes. Stated slightly differently:

- Capacity planning requires a firm understanding of required service levels

- Service level management is successful when the necessary IT architecture and assets are available

- Operational processes should incorporate both capacity planning and SLM to maximize success

We will now examine two key aspects of service level management: setting service level agreements, and maintaining service levels over time as things change.

Service Level Agreements (SLAs)

Many of the IT organizations we talk to do not have any type of SLA mechanism in place and this is a concern. How can you adequately provision a service if you do not have goals to shoot for? And how can you plan upgrades if you do not know what the business expects for this service over time? We recommend at least recording the following information for each service:

- The business processes supported by the service

- The priority to the business of these processes

- The expected demand for this service and the seasonality, if any, of that demand

- The expected growth in demand for this service over the next three years

- The worst response time or throughput that is acceptable for this service

You do not have to make this process formal with signed agreements, but it is a very good idea for both IT and the affected business units to have a copy of the above information to resolve conflicts should they arise.
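One lightweight way to record this information is a small structured record per service. The Python sketch below is one possible shape, not a prescribed format; the class name, field names, units, and sample values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServiceLevelRecord:
    """Minimal SLA information for one service (field names are illustrative)."""
    service: str
    business_processes: list          # business processes the service supports
    business_priority: str            # priority of those processes to the business
    expected_demand_per_hour: float   # expected demand for the service
    seasonality: str                  # seasonality of that demand, if any
    growth_next_three_years: list     # expected fractional growth, one entry per year
    worst_response_time_s: Optional[float] = None   # worst acceptable response time
    min_throughput_per_s: Optional[float] = None    # or worst acceptable throughput

    def meets_response_objective(self, measured_response_time_s):
        """True if a measured response time is within the recorded objective."""
        if self.worst_response_time_s is None:
            raise ValueError("no response time objective recorded")
        return measured_response_time_s <= self.worst_response_time_s


# Hypothetical example record (illustrative values only).
record = ServiceLevelRecord(
    service="appointments",
    business_processes=["scheduling"],
    business_priority="high",
    expected_demand_per_hour=5000,
    seasonality="peaks at month end",
    growth_next_three_years=[0.10, 0.10, 0.05],
    worst_response_time_s=2.0,
)
print(record.meets_response_objective(1.5))
```

Keeping the record in a form that both IT and the business unit can read makes it easier to resolve disagreements later.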

With this minimal information, or a more complete and formal SLA if you have it, you are now ready to use capacity planning to ensure performance that is adequate at a minimum cost.

The key SLA questions to answer are:

- What hardware configuration is required for the performance and workload level?

- Can the service be provisioned on existing systems and meet the requirements?

- What hardware upgrade plan must be in place for anticipated business growth?

There are many ways to determine how much hardware capacity you need to deliver a service level. You can perform load testing, but this assumes you can afford exact duplicates of the production environment in the test lab, and load testing fails in a consolidated environment anyway. As an alternative, you can use capacity modeling to determine how much hardware you will need.

About the only way to find out if a service can be provisioned on an existing system with the specified service level is to use capacity modeling. And what about after it is up and running? How do you maintain the service level as workloads grow? When do you need to upgrade, if at all? Capacity modeling is often the best way to know for sure. We will present best practices for doing this in a moment.

Proactive Management of Resources to Maintain Service Levels

Essentially you must answer the following questions:

- Do predicted service levels match real service levels?

- Can we consolidate applications or servers to save money and still provide the required service?

- If growth plans change, what does this mean to our upgrade plan?

How do you manage resources to maintain service levels and minimize costs? First you need to measure service levels and compare them to the specified and predicted levels. This requires a performance management system with workload measurement. Are you providing adequate service levels but at the expense of over-provisioning? You can find this out by (a) looking at historical resource utilization for the service and the system and looking for headroom, or (b) building a model and stressing it to see where it breaks.
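The measurement side of this comparison can be sketched briefly in Python. The sample response times, the utilization samples, the 95th-percentile choice, and the 75% utilization ceiling are all assumptions made for illustration; use whatever statistic and ceiling your own SLA and site practice call for.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


def headroom(utilization_samples, ceiling):
    """Capacity left between the historical peak utilization and a chosen ceiling."""
    return ceiling - max(utilization_samples)


# Hypothetical measurements (illustrative only).
response_times_s = [0.8, 0.9, 1.1, 1.0, 1.3, 0.7, 1.9, 1.2, 1.0, 0.9]
hourly_cpu_util = [0.22, 0.31, 0.28, 0.35, 0.30]
SLA_RESPONSE_S = 2.0       # assumed worst acceptable response time
UTIL_CEILING = 0.75        # assumed acceptable maximum utilization

p95 = percentile(response_times_s, 95)
print(f"95th percentile response: {p95:.1f}s, SLA met: {p95 <= SLA_RESPONSE_S}")
print(f"CPU headroom: {headroom(hourly_cpu_util, UTIL_CEILING):.0%}")
```

A service that meets its objective with a large amount of headroom is a candidate for consolidation; one that meets it with little headroom needs an upgrade plan.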

If a service is over-provisioned now, will it remain so for the foreseeable future? If so, you should definitely consolidate, or provision a new service on that system. It is always a good idea to check with business units or other client organizations periodically to verify the growth plans in the SLA. What if they recently changed? The best thing to do is get out your model of this service, plug in the revised growth projections, and see how your upgrade plan looks now.

Service Level Management Scenarios

The following scenarios illustrate capacity planning applications for service level management.

Provisioning a new service to meet an SLA

- Perform load planning and testing.

- Baseline the test runs.

- Build a model of the test environment.

- Change the model parameters to the production environment.

- Solve the model for the projected workloads.

- Compare predicted response time/throughput to service level parameters.

- Make necessary changes to planned provisioning.

Adding an SLA to an existing service

- Build a model of the production environment.

- Solve the model for the projected workloads.

- Compare predicted response time/throughput to service level parameters.

- Make necessary changes to planned provisioning.

Developing an upgrade plan under an SLA

- Gather projected growth estimates from business units.

- Build a model of the existing service based on current configuration and workload.

- Solve the model for one, two and three year growth projections.

- Compare predicted response times and throughputs to the SLA.

- Use modeling what-if scenarios to find least cost, just-in-time upgrade path.
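The upgrade-plan scenario above can be sketched as a loop over yearly growth projections that adds capacity only when the predicted response time would exceed the SLA. The response time estimate used here is deliberately crude (each CPU is treated as an independent single-server queue receiving an equal share of the work) and stands in for a real capacity model. The function names and all input values are hypothetical.

```python
def predicted_response_time(arrival_rate, service_time, cpus):
    """Crude estimate: each CPU is an independent M/M/1 queue with equal load."""
    rho = arrival_rate * service_time / cpus
    return float("inf") if rho >= 1 else service_time / (1 - rho)


def upgrade_plan(arrival_rate, yearly_growth, service_time, cpus, sla_s, max_cpus=64):
    """For each projected year, return the fewest CPUs (>= current) that meet the SLA."""
    plan = []
    for year, growth in enumerate(yearly_growth, start=1):
        arrival_rate *= 1 + growth
        # Add CPUs one at a time, and only when the SLA would otherwise be missed.
        while predicted_response_time(arrival_rate, service_time, cpus) > sla_s:
            if cpus >= max_cpus:
                raise ValueError(f"SLA cannot be met in year {year} with {max_cpus} CPUs")
            cpus += 1
        plan.append((year, cpus))
    return plan


# Hypothetical inputs (illustrative only): 1.5 requests/s today, 20% growth per year,
# 0.5 s of CPU per request, 2 CPUs installed, 1.0 s response time objective.
plan = upgrade_plan(1.5, [0.2, 0.2, 0.2], service_time=0.5, cpus=2, sla_s=1.0)
print(plan)
```

With these inputs the existing configuration is sufficient for the first year, and a third CPU is needed in the second year. That is the just-in-time upgrade path the last step of the scenario asks for.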

To summarize, the goals of service level management and capacity planning are tightly interwoven. Both strive to provide adequate levels of service at an acceptable cost. And both are much more successful when implemented together as part of a disciplined and unified process.

Bibliography

1. “The Value Proposition for Capacity Planning,” Enterprise Management Associates, 2003.

2. The Office of Government Commerce web site, http://www.ogc.gov.uk.

3. “How to Do Capacity Planning,” Fortra.

4. “Consolidating Applications onto Underutilized Servers,” Fortra.

5. Sturm, Rick & Morris, Wayne, Foundations of Service Level Management, SAMS, 2000.
