Finding the right tools for IT is a challenge because different organizations have varying needs. Identifying your organization's modeling needs is key to deciding which capacity planning tool is right for you.

Here's why:

Anybody with a dartboard can claim they have a capacity planning tool. Unfortunately, companies selling dartboards for capacity planning aren't likely to be very honest about the sophistication or accuracy of their tools. Buyer beware.

If you have an unlimited IT budget, dartboards are fine for IT optimization. But the rest of us need accurate predictions of future workload resource requirements to meet business objectives.

Accuracy in resource capacity planning helps balance IT risk and health.

Too much risk means you could lose revenue and customers from downtime. But you also don’t want to achieve IT health through overspending.

Good capacity planning software will provide the appropriate metrics for both of these factors.

So how do you avoid buying a dartboard when what you really need are accurate predictions? What makes a good capacity planning tool? What should a buyer in your position be looking for?

What Is NOT Good Enough

Performance Monitoring

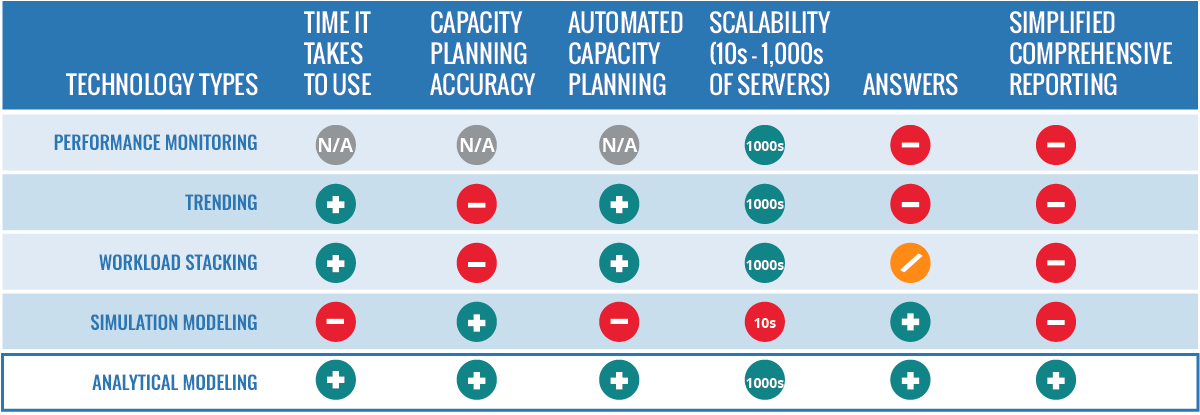

First off, performance monitoring is not the same as capacity planning. Planning involves proactive provisioning for future demand.

Getting an alarm 15 minutes before users complain only lets you react. Alarms are essential components of capacity management, but they don’t provide visibility into the future.

Trending

Many capacity planners keep historical data and use it to plot trends. And they hope those trends can accurately predict future performance.

While this strategy is better than nothing, beware of vendors that tout trending as capacity planning. In many situations it can expose you to significant risk.

This strategy assumes that workloads increase at a steady rate—or at a rate based on some statistical formula that isn’t necessarily accurate.
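To make that assumption concrete, here is a minimal sketch of what straight-line trending amounts to: a least-squares line fitted to past utilization samples and extended into the future. The function names and sample values are hypothetical, and trending features may use other statistical fits, but the core assumption (the past rate simply continues) is the same.

```python
from typing import Sequence, Tuple


def linear_trend(history: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of y = slope * t + intercept, where t = 0, 1, 2, ...
    indexes the historical samples (e.g. monthly average CPU utilization)."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_t = (n - 1) / 2
    mean_y = sum(history) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(history))
    var = sum((t - mean_t) ** 2 for t in range(n))
    slope = cov / var
    return slope, mean_y - slope * mean_t


def extrapolate(history: Sequence[float], periods_ahead: int) -> float:
    """Extend the fitted line `periods_ahead` samples past the last one.
    This assumes growth continues at exactly the historical rate."""
    slope, intercept = linear_trend(history)
    t = (len(history) - 1) + periods_ahead
    return slope * t + intercept
```

For monthly samples of 40, 42, 44, 46, and 48 percent, `extrapolate(history, 6)` projects 60 percent six months out. Nothing in the calculation knows about new workloads, consolidation, or contention between workloads.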

Because of these assumptions, trending often breaks down when it comes time to add a new workload or consolidate servers. Simply stacking values isn’t good practice because it falsely assumes that computer system performance is linear and that there is no resource contention.

You need your tool to assess more than just past system performance in order to make accurate predictions about the future.

Remember that performance doesn’t scale linearly. Those who use trending as a capacity planning tool simply try to keep utilization rates below a specific threshold like 75 percent.
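One standard way to see the nonlinearity is the textbook single-server (M/M/1) queuing formula, where mean response time is R = S / (1 - U) for service time S and utilization U. The sketch below is that approximation only, not a model of any real system, and the 10 ms service time is a hypothetical value.

```python
def mm1_response_time(service_time: float, utilization: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - U)."""
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return service_time / (1.0 - utilization)


if __name__ == "__main__":
    service = 0.010  # hypothetical: 10 ms of CPU per request
    for u in (0.50, 0.75, 0.90, 0.95):
        ms = mm1_response_time(service, u) * 1000
        print(f"utilization {u:.0%}: mean response time {ms:.0f} ms")
```

With these inputs, moving from 75 to 95 percent utilization multiplies the predicted response time by five, even though utilization rose by only 20 points.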

Understanding workload behavior provides critical insight into which periods of time the projection should be based on.

This kind of inexact estimation practically guarantees over-provisioning. And companies that do this are often unaware that they’re wasting money or exposing themselves to serious risk.

Workload Stacking

Be wary of tools that do "capacity planning" for server consolidation with simple math. They do this by adding together the resource utilization of each of the workloads being considered for consolidation.

It's a basic math concept:

- Normalize CPU utilization

- Add the utilization for each workload together

- Determine how much of the target CPU will be utilized after consolidation

A similar calculation is performed for other key components such as memory, IO, and the network.
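A minimal sketch of those three steps for CPU, assuming each server's capacity can be summarized as a single relative rating (a hypothetical simplification; the `Workload` class and the rating values are illustrative only):

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Workload:
    name: str
    cpu_utilization: float  # fraction of its current server's CPU (0..1)
    source_rating: float    # hypothetical relative CPU rating of that server


def stacked_utilization(workloads: Iterable[Workload], target_rating: float) -> float:
    """Naive workload stacking: returns the fraction of the target CPU
    the combined workloads are expected to use."""
    total = 0.0
    for w in workloads:
        # Step 1: normalize this workload's CPU use to the target's capacity.
        normalized = w.cpu_utilization * w.source_rating / target_rating
        # Step 2: add it to the running total.
        total += normalized
    # Step 3: the total is the projected utilization of the target CPU.
    return total
```

Three workloads using 30 percent and 40 percent of two 100-rated servers and 20 percent of a 200-rated server stack to 27.5 percent of a 400-rated target. The sum is the whole answer: no queuing, no contention, no interaction between the workloads.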

This kind of simplistic procedure can be effective enough to find potential candidates for consolidation. But it leaves way too much out of the equation to be solely responsible for consolidating important workloads.

The indicators of IT health and risk you would use with this method are artificial and not based on actual behavior. This will inevitably lead to over-provisioning, outages, and other unpleasantness.

You need capacity planning software that understands the details of your server architecture, how heavily your applications use that architecture, and how workloads will interact when consolidated.
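As a worked illustration of that interaction, take the textbook single-server approximation R = S / (1 - U), where S is service time and U is utilization. This is an assumption made for the arithmetic; real servers have multiple cores and messier behavior. Suppose two workloads each use 40 percent of identical servers and are stacked onto one of them:

```latex
R_{\text{before}} = \frac{S}{1 - 0.4} \approx 1.67\,S
\qquad
R_{\text{after}} = \frac{S}{1 - 0.8} = 5\,S
```

Simple stacking reports 80 percent utilization, which may look acceptable, but under this approximation the predicted response time triples.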

What You Really Need: Modeling

It’s always been true that the best capacity planning software uses some sort of performance modeling. But traditional modeling can be time-consuming. So capacity planners typically only have enough time to use it for highly critical applications.

Thankfully, there are solutions that can automate predictions, allowing you to expand the process to your entire infrastructure: everything, not just your most important servers. The capacity planner can then focus their time on mitigating risk.

Sometimes when people talk about a "model," they mean a description or diagram. That's not the kind of model we are talking about in this case.

You do need a description of the systems involved. But that description is really just one step in a good capacity planning process.

What you want is a tool that can look at that description along with information regarding the incoming workloads. It can then predict how the systems will perform and identify risk.

There are at least two modeling methods used by capacity planning software to predict performance: simulation modeling and analytic modeling.

Good: Simulation Modeling

A good simulation modeling tool will create a queuing network model based on the system being modeled and simulate running the incoming workloads on that network model.

These simulations can be highly accurate, but a lot of work is needed to adequately describe the systems with enough detail to produce dependable results.
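A toy illustration of the idea, far simpler than a queuing network: a single CPU modeled as one first-in, first-out queue, with randomly generated requests run through it. The function name and rates are hypothetical, and the exponential arrival and service distributions are an assumption chosen so the result can be checked against the M/M/1 formula.

```python
import random


def simulate_single_queue(arrival_rate: float, mean_service: float,
                          n_requests: int = 200_000, seed: int = 1) -> float:
    """Simulate one FIFO server with Poisson arrivals and exponentially
    distributed service times; return the mean response time in seconds."""
    rng = random.Random(seed)
    arrival = 0.0          # arrival time of the current request
    server_free_at = 0.0   # time the server finishes the previous request
    total_response = 0.0
    for _ in range(n_requests):
        arrival += rng.expovariate(arrival_rate)        # next arrival
        start = max(arrival, server_free_at)            # queue if server busy
        server_free_at = start + rng.expovariate(1.0 / mean_service)
        total_response += server_free_at - arrival      # waiting + service
    return total_response / n_requests
```

With 75 requests per second and 10 ms of service per request (75 percent utilization), the simulated mean response time should land close to the 40 ms the M/M/1 formula gives, but only after a large number of simulated requests. Scaling this up to detailed networks of CPUs, disks, and network links is where simulation gets expensive.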

It makes plenty of sense to use flexible simulation models to plan for those “what-if” scenarios.

For example, you might use it to determine how long the headroom provided by a proposed investment in CPU infrastructure will last, so that you can build a business case for management.
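The question in that example can be sketched in a few lines. This version answers it with the single-server formula R = S / (1 - U) rather than a full simulation, and it assumes compound monthly demand growth and an upgrade that makes the CPU proportionally faster; the function name, parameters, and numbers are hypothetical.

```python
import math


def months_of_headroom(current_util: float, monthly_growth: float,
                       capacity_factor: float, service_time: float,
                       response_target: float) -> float:
    """Months until the predicted M/M/1 response time on the upgraded
    server exceeds response_target, assuming compound monthly growth.
    service_time is the per-request CPU time on the *current* server."""
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")
    # A proportionally faster CPU shortens service time and lowers utilization.
    new_service = service_time / capacity_factor
    if response_target <= new_service:
        return 0.0
    # Solve S / (1 - U) = target for the highest tolerable utilization.
    max_util = 1.0 - new_service / response_target
    util_now = current_util / capacity_factor
    if util_now >= max_util:
        return 0.0
    # Compound growth: util_now * (1 + g) ** months = max_util.
    return math.log(max_util / util_now) / math.log(1.0 + monthly_growth)
```

For a server at 70 percent utilization today, 3 percent monthly growth, a CPU twice as fast, 10 ms of service per request, and a 20 ms response-time target, the sketch estimates a little under 26 months of headroom.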

Simulation is still the preferred method for networks, but it’s so resource-intensive that it’s practically impossible to use as a capacity planning tool for servers and server applications.

For your most critical capacity planning needs, you’ll need something that utilizes queuing theory.

Better: Analytic Modeling

While analytic modeling also takes queuing into account, it doesn’t simulate the incoming workloads on the model.

In a good analytic modeling tool, formulas based on queuing theory are used to mathematically calculate processing times and delays (throughputs and response times).

This type of modeling is much quicker—and not nearly as tedious to set up. The results can be just as accurate as simulation modeling results.
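Mean Value Analysis (MVA) is one classic example of computing throughput and response time directly from queuing formulas, with no simulated requests. The sketch below is exact MVA for a closed, single-class network of load-independent queuing centers. It is offered as an illustration of analytic modeling in general, not as the algorithm any particular product uses, and the service demands are hypothetical.

```python
from typing import List, Sequence, Tuple


def mva(demands: Sequence[float], n_users: int,
        think_time: float = 0.0) -> Tuple[float, float, List[float]]:
    """Exact Mean Value Analysis for a closed, single-class network of
    load-independent queuing centers.

    demands: total service demand per transaction at each center (seconds).
    Returns (throughput, response_time, queue_lengths) for n_users users.
    """
    if n_users < 1:
        raise ValueError("n_users must be at least 1")
    queue = [0.0] * len(demands)
    throughput = response = 0.0
    for n in range(1, n_users + 1):
        # An arriving transaction sees the queues left by n - 1 users.
        residence = [d * (1.0 + q) for d, q in zip(demands, queue)]
        response = sum(residence)
        throughput = n / (think_time + response)        # Little's law, whole system
        queue = [throughput * r for r in residence]     # Little's law, per center
    return throughput, response, queue
```

For example, `mva([0.02, 0.05, 0.01], 50, think_time=1.0)` models 50 users, each pausing 1 second between transactions that need 20 ms of CPU, 50 ms of disk, and 10 ms of network time. Throughput can never exceed 1 / 0.05 = 20 transactions per second because the disk is the bottleneck, and the cost of the calculation grows only linearly with the number of users and service centers.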

It’s important to pick the right data to model (e.g., peak online transactions) and to ensure that it represents the situations you need to plan for.
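A minimal sketch of one such selection step: bucket transaction counts by clock hour and keep the busiest hour as the period to model. The `(timestamp, count)` input format and the function name are assumptions, and deciding which peaks matter (month-end, seasonal, one-off anomalies) still takes context this code does not have.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple


def peak_hour(samples: Iterable[Tuple[datetime, int]]) -> Tuple[datetime, int]:
    """Group (timestamp, transaction_count) samples into clock hours and
    return (hour_start, total) for the busiest hour.
    Raises ValueError if there are no samples."""
    totals: Dict[datetime, int] = defaultdict(int)
    for ts, count in samples:
        hour_start = ts.replace(minute=0, second=0, microsecond=0)
        totals[hour_start] += count
    if not totals:
        raise ValueError("no samples")
    return max(totals.items(), key=lambda item: item[1])
```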

When the process of selecting and contextualizing data isn’t automated, you must rely heavily on the skills of the analyst doing the work and run the risk of making mistakes.

Without automation, it becomes extremely easy to miss important data and get inaccurate projections of future needs.

Best: Automated Analytic Modeling

To get the most out of your software, you’ll want it working for you 24/7.

Automated predictive analytics are what capacity planners will find most useful in their day-to-day monitoring of applications and systems. This helps them keep a constant eye on the performance of large numbers of infrastructure elements that serve applications and business services. When risk is identified, the capacity planner can focus on mitigating risk rather than scanning hundreds of reports looking for issues.
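A minimal sketch of that "scan everything, surface only the risks" loop. It uses the single-server formula R = S / (1 - U) as a stand-in for a real performance model, and the `ServerForecast` class, its fields, and the response-time target are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class ServerForecast:
    name: str
    service_time: float    # hypothetical seconds of CPU per request
    projected_util: float  # forecast CPU utilization (may exceed 1.0)


def at_risk(servers: Iterable[ServerForecast], response_target: float) -> List[str]:
    """Return names of servers whose predicted M/M/1 response time exceeds
    response_target, or that are projected to be saturated."""
    flagged = []
    for s in servers:
        if s.projected_util >= 1.0:
            flagged.append(s.name)  # saturated: the queue grows without bound
            continue
        predicted = s.service_time / (1.0 - s.projected_util)
        if predicted > response_target:
            flagged.append(s.name)
    return flagged
```

Run repeatedly against fresh forecasts, a loop like this leaves the planner with a short list of flagged servers instead of hundreds of reports.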

Want to cover your bases? Get a tool that can do both analytic and simulation modeling.

So, what makes a good capacity planning tool?

Efficient capacity planning software takes a number of complex factors into account. Learn more about a few of the most important criteria in our Buyers Guide: Capacity Management Tool Checklist.

Interested in Automated Capacity Planning Made Easy?

Vityl Capacity Management may be what you need. Try it free for 30 days.