When companies first start to provision or migrate applications to the cloud, cost management is probably not the first thing on their minds. The main drivers are things like application agility, operational assurance, and alignment of strategic focus. Or they may have assumed that moving to the cloud and reducing their need for capital expenditure would automatically save them money. But without cost management for their cloud services, many find that they have achieved the opposite: their IT bill has actually increased. Not surprisingly, several recent surveys have identified cloud cost management as one of the top priorities in response to these rising costs.

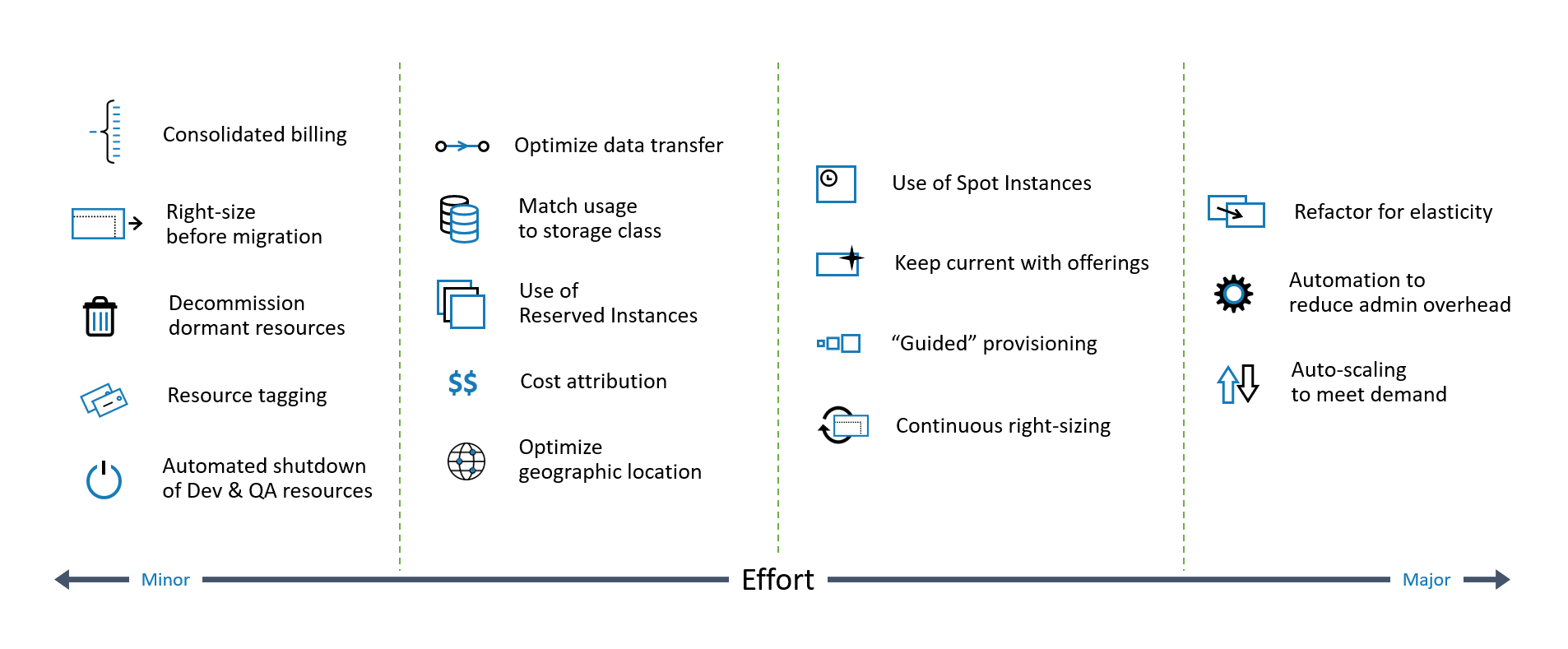

Cost optimization of public cloud services is carried out through a number of different measures, each addressing a non-optimal usage pattern or exploiting a cost-saving feature of the cloud service used. Out of all these possible activities, you need to identify which ones are relevant and applicable to your specific situation and your use of cloud services.

Another important aspect to consider is the effort involved in implementing them. Some are quite simple to apply and should almost be considered mandatory. Others take more effort, and you have to carefully weigh the estimated savings against the cost of implementing them. Some involve fundamental changes to how your application is designed or operated, and the increased risk associated with such changes also needs to be taken into account.

Below is an outline of the different cost-saving activities, classified in terms of the effort involved in implementing them. We’ll start with the activities requiring little effort and work our way up to the ones that require more effort.

Activities for Cloud Cost Optimization

1. Consolidated billing

If your organization is using multiple accounts with a public cloud service like AWS, it will probably make sense to consolidate those accounts to receive a single bill. Doing this will allow you to get a better overview of your total cost structure, and it can also potentially qualify you for a better volume discount with the service provider.
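To illustrate why combining accounts can lower the bill, the sketch below prices usage against a graduated (tiered) rate schedule, first per account and then for the combined total. The tier boundaries, prices, and account names are hypothetical and do not reflect any provider's actual price list; whether a given service aggregates usage across consolidated accounts for tiering depends on the provider.

```python
# Hypothetical graduated price schedule: (upper bound of tier in units, price per unit).
# Illustrative numbers only; they are not any provider's real prices.
TIERS = [(10_000, 0.10), (50_000, 0.08), (float("inf"), 0.06)]


def tiered_cost(units: float, tiers=TIERS) -> float:
    """Price `units` of usage against a graduated tier schedule."""
    cost = 0.0
    lower = 0.0
    for upper, price in tiers:
        if units <= lower:
            break
        # Only the usage that falls inside this tier is billed at this tier's price.
        cost += (min(units, upper) - lower) * price
        lower = upper
    return cost


# Hypothetical monthly usage per account.
accounts = {"team-a": 8_000, "team-b": 9_000, "team-c": 12_000}

separate = sum(tiered_cost(u) for u in accounts.values())
consolidated = tiered_cost(sum(accounts.values()))
print(f"separate: {separate:.2f}, consolidated: {consolidated:.2f}")
```

With these illustrative numbers, billing the three accounts separately comes to 2,860, while the consolidated total of 29,000 units comes to 2,520, because most of the combined usage falls into the cheaper second tier.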

2. Right-sizing before migration

While some workloads need to be refactored before being moved to the public cloud, many others are moved without any changes to the architecture of the application. This simple rehosting exercise is often referred to as “lift and shift”. To minimize risk, the size and characteristics of the target instance are selected based on how the workload has been hosted up until now. Unfortunately, this also means that any inefficiencies of the on-prem configuration are carried over to the cloud.

So rather than trying to mimic the existing configuration, use the migration as an opportunity to right-size the instances. A tool that analyzes the historical behavior of the workload and derives an optimal configuration from its resource utilization and seasonal variations can uncover considerable savings.
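A minimal sketch of the kind of analysis such a tool performs, assuming you have a history of vCPU demand samples for the workload: take a high percentile of observed demand, add headroom, and choose the smallest instance that covers it. The catalog, the 95th-percentile target, and the 20% headroom are hypothetical choices, and the sketch sizes on CPU alone, whereas a real tool would also consider memory, I/O, and price. The sample window should span at least one full business cycle so that seasonal peaks are captured.

```python
import math

# Hypothetical catalog: size name -> vCPUs. Real catalogs also differ in memory,
# network, and price; this sketch sizes on CPU demand only.
CATALOG = {"small": 2, "medium": 4, "large": 8, "xlarge": 16}


def percentile(values, pct):
    """Nearest-rank percentile, pct in the range 0-100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_size(vcpu_samples, pct=95, headroom=1.2, catalog=CATALOG):
    """Smallest size whose vCPUs cover the pct-th percentile of demand plus headroom."""
    needed = percentile(vcpu_samples, pct) * headroom
    for name, vcpus in sorted(catalog.items(), key=lambda kv: kv[1]):
        if vcpus >= needed:
            return name
    # Demand exceeds every size in the catalog: fall back to the largest.
    return max(catalog, key=catalog.get)


# Hypothetical vCPU demand sampled from a workload hosted on a 16-vCPU on-prem server.
samples = [1.5, 2.0, 2.2, 2.8, 3.0, 2.5, 1.8, 2.1, 2.4, 2.9]
print(recommend_size(samples))  # medium
```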

3. Decommission dormant resources

Time-limited initiatives end, and the resources assigned are no longer needed. Decommissioning those resources as soon as possible will obviously have a cost-saving impact. A process for continuous identification and decommissioning of dormant resources, both compute and storage, should be automated and part of your ongoing management process. Proper tagging and a good understanding of seasonal variations will minimize the risk of removing resources by mistake.
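As a sketch of the identification step, the function below flags resources with no recorded activity within a configurable window, skipping anything explicitly tagged to be retained. The `Resource` record, the `retain` tag, and the 30-day default are hypothetical; what counts as activity depends on what your monitoring collects. Set the window longer than the longest seasonal gap (a quarter-end job, for example) and route the result to a review step rather than deleting automatically.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Resource:
    resource_id: str
    kind: str              # e.g. "compute" or "storage"
    last_active: datetime  # last time monitoring recorded meaningful activity
    tags: dict = field(default_factory=dict)


def find_dormant(resources, now, idle_days=30, keep_tag="retain"):
    """Return resources idle for longer than `idle_days`, skipping any tagged keep_tag=true.

    The result is meant to feed a review or decommissioning step, not to delete directly.
    """
    cutoff = now - timedelta(days=idle_days)
    return [
        r for r in resources
        if r.last_active < cutoff and r.tags.get(keep_tag, "").lower() != "true"
    ]


# Hypothetical inventory.
now = datetime(2024, 6, 30)
inventory = [
    Resource("vm-1", "compute", datetime(2024, 6, 29)),
    Resource("vol-7", "storage", datetime(2024, 3, 1)),
    Resource("vm-9", "compute", datetime(2024, 1, 15), {"retain": "true"}),  # year-end job
]
print([r.resource_id for r in find_dormant(inventory, now)])  # ['vol-7']
```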

4. Resource tagging

Proper tagging of all resources provisioned in the public cloud is key to many of the other cloud cost optimization initiatives and is therefore a fundamental requirement.

From a cost tracking perspective, all provisioned resources should be tagged with owner, cost center/business unit, customer, and project. It’s also important to use tags to indicate business service, application role, and environment (dev/test/prod) to enable automated optimization activities.
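A simple way to enforce the tagging policy is to check every resource against the required keys before or after provisioning. The tag key names below mirror the categories listed above, but the exact spellings are hypothetical; adapt them to your own naming convention.

```python
# Hypothetical key spellings for the tag categories described above.
REQUIRED_TAGS = {
    "owner", "cost-center", "customer", "project",
    "business-service", "application-role", "environment",
}
ALLOWED_ENVIRONMENTS = {"dev", "test", "prod"}


def tag_violations(tags: dict) -> list:
    """List the policy problems with one resource's tags (empty list means compliant)."""
    problems = [f"missing tag: {key}" for key in sorted(REQUIRED_TAGS - set(tags))]
    env = tags.get("environment")
    if env is not None and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid environment: {env}")
    return problems


print(tag_violations({"owner": "jdoe", "project": "checkout", "environment": "staging"}))
```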

5. Automated shutdown of Dev & QA resources

Most applications or workloads are not used on a 24/7 basis, especially not in development and quality assurance environments. An inverted follow-the-sun strategy where you automatically shut down your instances during the hours of inactivity will save you considerable cost.
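The scheduling decision itself is simple. The sketch below assumes a hypothetical 07:00 to 19:00 weekday window for non-production environments and keeps production running around the clock. A scheduler (a cron job, a serverless function, or the provider's own scheduling feature) would call this periodically and stop or start instances accordingly; that integration is not shown.

```python
from datetime import datetime, time, timedelta

# Hypothetical working window for non-production environments (local time).
WORK_START = time(7, 0)
WORK_END = time(19, 0)


def should_be_running(now: datetime, environment: str) -> bool:
    """Production runs 24/7; other environments only during weekday working hours."""
    if environment == "prod":
        return True
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_hours = WORK_START <= now.time() < WORK_END
    return is_weekday and in_hours


def weekly_running_hours(environment: str, week_start: datetime) -> int:
    """Count the hourly checkpoints in one week at which the instance would be up."""
    return sum(
        should_be_running(week_start + timedelta(hours=h), environment)
        for h in range(7 * 24)
    )


print(weekly_running_hours("dev", datetime(2024, 6, 3)))   # 60
print(weekly_running_hours("prod", datetime(2024, 6, 3)))  # 168
```

With this window a dev instance runs 60 of the 168 hours in a week, about 64% fewer compute hours than running 24/7. Attached storage is typically still billed while an instance is stopped.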

6. Optimize data transfer

In public cloud, networking is virtualized and available in a number of different configurations and types. By matching your networking setup more closely with your needs and optimizing the configuration, you can save cost.

Regardless of cloud provider, there is usually no charge for transferring data into the cloud environment, but transferring it out will cost you, and the transfer costs vary depending on which regions are involved. Major service providers offer dedicated network connection services and content delivery networks (CDNs), which can play a major role in keeping your data transfer charges down.

7. Match usage to storage class

Public cloud services offer a multitude of different storage options. They have different characteristics (performance, elasticity, availability, persistence, etc.) and are priced in accordance with the level of service provided. By properly identifying the characteristics and requirements of your applications and picking the optimal alternative you can reduce your cloud storage cost considerably.
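As a rough illustration of matching usage to storage class, the sketch below compares monthly cost across three hypothetical classes that trade a lower storage price for a higher retrieval charge. All prices are illustrative only. Real storage classes also differ in retrieval latency, minimum storage duration, request charges, and durability or availability, none of which is modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageClass:
    name: str
    storage_per_gb_month: float  # price to keep 1 GB for a month
    retrieval_per_gb: float      # price to read 1 GB back


# Hypothetical classes and prices; substitute your provider's price list.
CLASSES = [
    StorageClass("hot", 0.020, 0.0),
    StorageClass("infrequent", 0.010, 0.01),
    StorageClass("archive", 0.004, 0.03),
]


def monthly_cost(cls: StorageClass, stored_gb: float, retrieved_gb: float) -> float:
    return cls.storage_per_gb_month * stored_gb + cls.retrieval_per_gb * retrieved_gb


def cheapest_class(stored_gb: float, retrieved_gb: float, classes=CLASSES) -> StorageClass:
    """Class with the lowest monthly cost for the given storage and retrieval volumes."""
    return min(classes, key=lambda c: monthly_cost(c, stored_gb, retrieved_gb))


for retrieved in (2_000, 500, 50):
    print(retrieved, cheapest_class(1_000, retrieved).name)
```

For 1,000 GB stored, reading 2,000 GB a month favors the hot class, 500 GB favors the infrequent-access class, and 50 GB favors the archive class.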

8. Use of Reserved Instances

One way to optimize overall public cloud cost is to reduce the unit price. Service providers benefit from committed volumes and an improved ability to plan ahead (just as we do in our own data centers), so much so that they are willing to reward that commitment. By committing to future use of an instance type, you can typically get a considerable discount. A reserved instance is not a physical instance; it is a billing discount applied to the on-demand instances running in your account, and those instances must match certain attributes to qualify. You pay for the entire term, regardless of actual usage. Agreeing to pay upfront increases the discount even further.
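The commitment described above can be reduced to a break-even calculation: because the reservation is paid for every hour of the term, it only pays off if a matching instance would otherwise run on demand for more than a certain fraction of that term. The prices below are hypothetical, and the sketch ignores attribute matching and the different payment options.

```python
HOURS_PER_YEAR = 8760  # 365 days; ignores leap years


def reserved_term_cost(upfront: float, hourly: float, years: int) -> float:
    """The reservation is billed for every hour of the term, used or not."""
    return upfront + hourly * HOURS_PER_YEAR * years


def on_demand_cost(hourly: float, years: int, utilization: float) -> float:
    """On-demand cost if the instance runs for `utilization` (0 to 1) of the term."""
    return hourly * HOURS_PER_YEAR * years * utilization


def break_even_utilization(od_hourly: float, upfront: float, ri_hourly: float, years: int) -> float:
    """Fraction of the term an instance must run for the reservation to pay off."""
    return reserved_term_cost(upfront, ri_hourly, years) / on_demand_cost(od_hourly, years, 1.0)


# Hypothetical prices: 0.10/h on demand vs. 300 upfront + 0.02/h for a one-year term.
print(f"{break_even_utilization(0.10, 300, 0.02, 1):.1%}")  # 54.2%
```

Here the reservation costs 475.20 for the year against 876.00 for running on demand continuously, so it only pays off if the instance would run more than roughly 54% of the time.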

9. Cost attribution

Allocating IT cost in relation to activity has been a goal for many companies over the years. In traditional on-prem data center environments, this meant implementing instrumentation that could accurately track the activity of different tenants across shared components, aggregating the fully loaded cost of all resources (including depreciation of capital investments), and overcoming cultural barriers to internal charges.

In the cloud this changes completely. Paying for what you use is a fundamental mechanism, and not passing that cost on to the business units would mean wasting a great opportunity to increase cost awareness. Letting each consumer pay for what they actually use makes them much more considerate about how they request and consume resources, which is likely to have a major impact on total cost.
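Once resources are tagged, showback or chargeback is largely an aggregation over the billing data. The sketch below assumes a simplified, hypothetical export in which each line item carries a cost and a tag dictionary; untagged spend is kept in its own bucket so that gaps in tagging stay visible.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key="cost-center"):
    """Sum billed cost per value of `tag_key`.

    Untagged spend is reported under "unallocated" so tagging gaps stay visible
    instead of being silently spread across business units.
    """
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "unallocated")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical, simplified billing export.
items = [
    {"cost": 120.0, "tags": {"cost-center": "marketing"}},
    {"cost": 80.0, "tags": {"cost-center": "finance"}},
    {"cost": 30.0, "tags": {"cost-center": "marketing"}},
    {"cost": 15.0},
]
print(cost_by_tag(items))  # {'marketing': 150.0, 'finance': 80.0, 'unallocated': 15.0}
```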

10. Optimize geographic location

Placing your data close to the users will reduce latency and could also be a requirement to comply with data sovereignty regulations, but it can also have an impact on cost. The different locations offered by service providers operate within local market conditions, and resource pricing can differ. Choosing the optimal location for your resources allows you to run at the lowest possible cost globally.
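Choosing a location can be framed as picking the lowest price among the regions that satisfy your constraints. In the sketch below, the region names and hourly prices are hypothetical, and data sovereignty is modeled as a simple allow-list; latency to users is not modeled and would need to be weighed separately.

```python
def cheapest_region(prices, allowed=None):
    """Return the lowest-priced region, optionally restricted to an allow-list.

    prices:  region name -> price for the same resource type in that region
    allowed: regions permitted by data-sovereignty rules (None means no restriction)
    """
    candidates = {r: p for r, p in prices.items() if allowed is None or r in allowed}
    if not candidates:
        raise ValueError("no permitted region offers this resource")
    return min(candidates, key=candidates.get)


# Hypothetical region names and hourly prices for one instance type.
prices = {"eu-north": 0.092, "eu-west": 0.100, "us-east": 0.085, "ap-south": 0.096}
print(cheapest_region(prices))                                   # us-east
print(cheapest_region(prices, allowed={"eu-north", "eu-west"}))  # eu-north
```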

11. Use of Spot Instances

Spot instances are a way for cloud providers to monetize their excess capacity by reselling it. These short-lived instances are offered at a very low cost compared to on-demand or reserved instances, and the price varies with supply and demand.

Because available spot capacity can’t be guaranteed at any given point in time, spot instances are obviously not suitable for all types of workloads. They can be very useful for time-insensitive batch processing or in situations where you need to temporarily supplement your normal capacity to handle a spike in demand. Under those circumstances they can offer considerable cost savings.

12. Keep current with offerings

In recent years, the level of innovation in the public cloud service space has been staggering. Service providers release new and updated services at a pace that many customers have a hard time keeping up with. But there are good reasons to continuously evaluate available alternatives and be open to adopting them. Sometimes new types of resources, underpinned by new and improved infrastructure components, provide better performance or capacity for the same price. Other times, the software stack you’ve implemented for an application may now be readily available as a prepackaged service, allowing you to reduce your complexity and administrative cost.

13. “Guided” provisioning

One of the main drivers for public cloud adoption is the self-service element and the agility it brings. Provisioning resources for new initiatives is quicker and can be distributed to a wider group in the organization. But this decentralization also leads to less control and the potential for over-allocation and sprawl. Under those circumstances, it’s tempting to implement policies that limit the choices and steer users toward a predefined set of “recommended” configurations, keeping costs under control. It’s important to strike a balance between standardization and policies on the one hand (which allow you to optimize, rationalize, and automate the management of your applications) and the agility and decentralization that self-service brings on the other.
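One way to implement such a policy while preserving some of the self-service agility is an allow-list per environment with an explicit exception path. The environment names, sizes, and exception flag below are hypothetical; in practice this logic would sit in a provisioning pipeline or in the provider's own policy service.

```python
# Hypothetical "recommended" sizes per environment.
APPROVED_SIZES = {
    "dev": {"small", "medium"},
    "test": {"small", "medium"},
    "prod": {"medium", "large", "xlarge"},
}


def check_request(environment: str, size: str, exception_approved: bool = False):
    """Return (allowed, reason) for a self-service provisioning request."""
    allowed_sizes = APPROVED_SIZES.get(environment)
    if allowed_sizes is None:
        return False, f"unknown environment: {environment}"
    if size in allowed_sizes:
        return True, "recommended configuration"
    if exception_approved:
        # Escape hatch so standardization does not block legitimate needs.
        return True, "outside the recommended set, approved by exception"
    return False, f"{size} is not recommended for {environment}; request an exception"


print(check_request("dev", "xlarge"))
```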

14. Continuous right-sizing

It’s important to analyze the resources assigned to your workloads as they are migrated to the cloud to optimize cost. A similar sizing exercise needs to take place for new applications provisioned in the cloud. But as time goes by, the conditions under which your applications run may change:

- Workload intensity will vary due to seasonality in the business

- Changes in business processes impact the demand for services

- Resources were allocated based on incorrect assumptions and need to be corrected

Constantly evaluating and right-sizing the allocated resources, or even turning off dormant resources, can have a major impact on your overall cloud expenditure.

15. Refactor for elasticity

To fully benefit from the on-demand capabilities of the public cloud, where you only pay for what you use, applications need to be designed with that in mind. The single most important aspect is splitting monolithic applications into a number of microservices that exchange data through APIs. This allows you to add and remove capacity nodes on the fly to deal with changes in demand.

Refactoring existing applications can take considerable effort and is not always possible from the start. But considering the cost saving potential over time, you should evaluate and plan for an overhaul of all migrated workloads eventually.

16. Automation to reduce administrative overhead

Public cloud services are designed to simplify automation of tasks, including tactical optimization efforts like continuous right-sizing, shutdown during inactivity, and decommissioning of dormant resources. A mature set of automated processes will reduce administrative overhead and produce more predictable results.

17. Automated scaling to meet demand

All major public cloud services offer mechanisms to automatically increase or reduce the number of active instances based on certain triggering events or thresholds. The idea is to provide a service that automatically adjusts to changes in demand. In principle, the cost-saving potential of such a mechanism is considerable. In practice, however, it can be quite challenging to set and tune the upper and lower limits of those thresholds. It takes a thorough understanding of each application to get it right, and incorrect settings can result in decreased efficiency or increased service risk.
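The difficulty of tuning thresholds is easier to see in code. The sketch below makes one threshold-based scaling decision per evaluation period, with separate scale-out and scale-in thresholds (a dead band between them prevents oscillation) and hard minimum and maximum instance counts. All threshold values are hypothetical defaults; managed auto-scaling services typically add features such as cooldown periods that are not modeled here.

```python
def desired_instances(current: int, avg_cpu: float,
                      scale_out_at: float = 70.0, scale_in_at: float = 30.0,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """One threshold-based scaling decision per evaluation period.

    The gap between scale_in_at and scale_out_at is a dead band: load that hovers
    between them leaves the group unchanged instead of flapping up and down.
    """
    if avg_cpu > scale_out_at:
        target = current + 1
    elif avg_cpu < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(min_instances, min(max_instances, target))


# Replay a hypothetical series of average CPU readings (%).
count, trace = 2, []
for cpu in [50, 80, 85, 75, 60, 25, 20, 20, 20]:
    count = desired_instances(count, cpu)
    trace.append(count)
print(trace)  # [2, 3, 4, 5, 5, 4, 3, 2, 2]
```

The replay treats the readings as given; in a real system average CPU drops as instances are added, and that feedback loop is part of what makes the thresholds hard to tune.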

The efficiency and applicability of automated scaling depend on the extent to which the applications have been designed or refactored to use a cloud-optimized architecture. If they haven’t, automated scaling will have limited effect.
